If you’re currently grappling with the task of automating Linux deployment on Hetzner bare metal servers, and you’re comfortable with Ansible, hetzner-bare-metal-ansible might be something that will save you hours of work. But let’s start with a brief backstory on how this Open Source project came into existence in the first place.

Introduction

In recent years, there has been a trend toward so-called cloud repatriation, driven by cost efficiency, data sovereignty, and similar concerns. Companies are seriously considering moving off the cloud and back onto bare metal, especially for development environments. Whatever the reason, when companies start looking at bare metal, Hetzner is pretty much always on their radar.

As far as bare metal solutions are concerned, we and our customers often opt for Hetzner because of its value for the money. Recently, Hetzner has also been adding more and more services, such as its brand-new Object Storage. What’s great is that you can manage your infrastructure through those services directly without relying on third-party tools.

But here’s the catch: when migrating to Hetzner, SREs have to work out how to automate provisioning, OS installation, and configuration of physical servers. With the major cloud providers, these automation challenges are pretty much non-existent, thanks to ready-made Terraform providers, Ansible playbooks, and other solutions. Unfortunately, there’s no official Terraform provider for Hetzner Robot, the platform for managing dedicated root servers, colocation, and more.

From our experience, setting up a server manually can easily eat up a few hours, especially if you don’t have clear guides for the post-installation work, such as installing packages and joining servers to a Kubernetes cluster. What if you need to deploy dozens, let alone hundreds, of bare metal machines? In that case, building your own automation seems reasonable, yet it also requires a significant time investment.

The good news is that we’ve already tackled that for one of our clients. Now, we’re happy to release our tool as an Open Source project designed to reduce the manual efforts involved in deploying Linux-based bare metal servers to Hetzner.

Chosen technology and general approach

There are many Infrastructure-as-Code options out there, such as Ansible, Terraform/OpenTofu, Pulumi, and others. Unfortunately, with Hetzner Robot, you won’t find any official integrations for those. Luckily, we weren’t starting from scratch: we already had Ansible templates to set up everything we needed after the OS install. Thus, essentially, we just had to bridge the gap between server provisioning automation and our existing Ansible playbooks. With that in mind, we opted for Ansible as the IaC tool of choice.

Following this approach, we created an Ansible playbook that automates the whole process of setting up and configuring bare metal servers on Hetzner. So, what is it capable of doing? Here’s the rundown:

- Enabling and managing rescue mode via the Hetzner API.

- Installing the operating system and configuring servers using installimage.

- Autoselecting an SSH key to connect to the server in rescue mode.

- Configuring network interfaces, including both external and internal addresses.

- Installing all the required software packages.

- Support for Ubuntu 24.04 and Ubuntu 22.04.

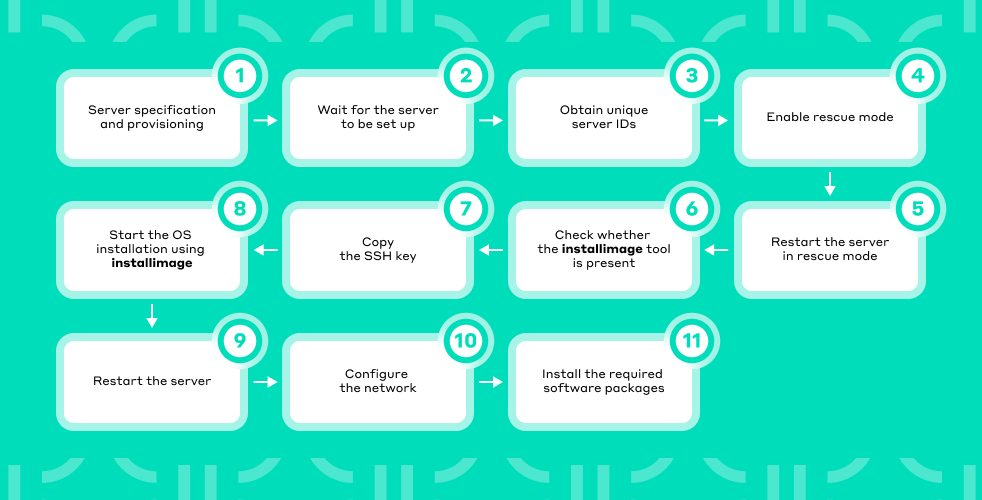

Steps for provisioning and configuring a server

The process of provisioning and setting up a bare metal server can be broken down into several key steps:

- Server specification and provisioning. First, we need to figure out the server specs, find suitable machines, and then order them.

- Wait for the server to be set up. Server installation may take some time, sometimes a few days.

- Obtain unique server IDs.

- Enable rescue mode. If rescue mode is disabled, you must enable it.

- Restart the server in rescue mode.

- Check whether the installimage tool is present. We will use it to install the operating system.

- Copy the SSH key from the local machine to the target server.

- Start the OS installation using installimage. At this step, you can also configure the file system since installimage allows you to use extra flags for that.

- Restart the server once the OS installation is complete.

- Configure the network. Following the reboot, connect to the server and configure both the external and internal networks.

- Install the required software packages and other essentials. In our particular scenario, this step entails adding users and copying their public SSH keys, and, when necessary, joining the node to the Kubernetes cluster environment.

Once these steps are complete, the server is good to go.
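Several of these steps end with a reboot, after which the playbook has to wait until the machine accepts SSH connections again. A minimal sketch of such a wait, using Ansible’s built-in wait_for_connection module, might look like this. The timing values are assumptions and would need tuning for real hardware:

```yaml
# Illustrative only: the timings below are assumed, not taken from the repository.
- name: Wait for the server to come back after a reboot
  ansible.builtin.wait_for_connection:
    delay: 30      # give the machine time to actually go down first
    sleep: 10      # seconds between connection attempts
    timeout: 600   # give up after 10 minutes
```

Keep in mind that the rescue system and the freshly installed OS typically present different SSH host keys, so strict host key checking may need to be relaxed for these hosts during provisioning.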

How our playbook works

Now, we’re ready to dive into each stage of the configuration and setup process for Hetzner bare-metal servers running Linux. As mentioned, our ready-to-use Ansible playbook is available in the hetzner-bare-metal-ansible repository on GitHub. Let’s take a closer look at its contents.

Repository layout

First, we’ll have a look at the repository structure. Here’s a quick tour of its files and directories:

- README.md: project description, instructions for getting started, and a list of existing feature flags.

- group_vars/all.yml: group variables that set the default values for feature flags, such as additional network configuration and software package installation. This file also contains the credentials for working with the Hetzner API.

- host_vars/*.yml: configuration files for individual hosts. They have the same structure as the group variables and allow you to override settings on a per-host basis.

- inventory/hosts.ini: the list of hosts to install the OS and software packages on.

- playbook.yml: the main file that runs and manages the Ansible roles.

- The roles directory includes:

  - create_users adds OS users and their public keys.

  - hetzner_bootstrap checks and activates rescue mode, then reboots the server.

  - install_os installs the operating system using installimage, partitions the disks, and adds the SSH key.

  - install_packages installs additional packages from the given list.

  - prepare_ssh_key checks for and, if necessary, creates the SSH key in the Hetzner Robot API to use later during server setup.

  - set_cpu_governor_performance creates a systemd unit to switch the CPU governor into performance mode.

  - setup_network configures the network.
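To show how these roles fit together, here is a rough sketch of what a playbook.yml along these lines could look like. The role order follows the numbering used later in this article; the play name and the hosts value are assumptions, and the repository’s actual file may differ:

```yaml
# playbook.yml: illustrative sketch, not the repository's actual file.
- name: Provision and configure Hetzner bare metal servers
  hosts: all                         # assumed; limit as needed
  roles:
    - prepare_ssh_key                # register the SSH key in Hetzner Robot
    - hetzner_bootstrap              # enable rescue mode and reboot
    - install_os                     # run installimage
    - setup_network                  # render and apply the netplan config
    - install_packages               # install additional packages
    - create_users                   # add users and their public keys
    - set_cpu_governor_performance   # switch the CPU governor to performance
```

Such a playbook would typically be run with ansible-playbook -i inventory/hosts.ini playbook.yml, optionally narrowed down with --tags (for example, --tags install_os).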

Obtaining Hetzner API credentials

The automation relies on the provider’s API for two tasks: enabling rescue mode on designated servers and rebooting them. Future iterations may extend our API usage to server provisioning. Still, given that Hetzner can take days to provision a server, we won’t prioritize that feature in the near term.

Refer to the Hetzner Robot documentation for up-to-date instructions on how to obtain credentials. We will use the hetzner_user and hetzner_password variables to work with the API in Ansible. After obtaining the credentials, add them to the variables in the group_vars/all.yml file:

    # Hetzner API credentials
    hetzner_user: "PASTE_YOUR_USERNAME"
    hetzner_password: "PASTE_YOUR_PASSWORD"

Choosing a distribution

Specify the Linux distribution you want to install in the group_vars/all.yml file.

    os_image: Ubuntu-2404-noble-amd64-base.tar.gz

- The installimage tool that will be used to install the OS is described in Hetzner’s official documentation.

- We have tested the Ansible playbook on Ubuntu 24.04 and Ubuntu 22.04, as these are the systems we work with. However, you may have your own requirements and preferences.

- To find out which operating systems and Linux distributions you can install, look in the /root/.oldroot/nfs/images/ directory in rescue mode, or take a peek at the documentation.
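If you prefer to check the available images from Ansible rather than by hand, a sketch like the following could list them. It assumes the server is already booted into the rescue system; the Ubuntu-* pattern and the available_images variable name are illustrative:

```yaml
# Sketch: run against a server that is currently in rescue mode.
- name: Find Ubuntu images offered by installimage
  ansible.builtin.find:
    paths: /root/.oldroot/nfs/images
    patterns: "Ubuntu-*"            # assumed pattern, adjust for other distributions
  register: available_images

- name: Show image file names
  ansible.builtin.debug:
    msg: "{{ available_images.files | map(attribute='path') | map('basename') | sort }}"
```

Any of the printed file names can then be used as the os_image value.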

Path to the SSH key

By default, we specify the ~/.ssh/id_rsa.pub path to the SSH key of the user who will be granted access to the installed operating system. But if you’d rather use a different key (either globally or for specific hosts), you can easily change the path in the ssh_public_key_path variable (in either the group_vars/all.yml or host_vars/*.yml file):

    ssh_public_key_path: ~/.ssh/custom_key.pub

After these preparations are accomplished, we’re ready to delve into the heart of our Ansible playbook: the roles and relevant tasks we will perform against each Linux server.

Ansible roles

#1: Prepare SSH key

The first step is to verify that the key exists at the path specified in the ssh_public_key_path parameter and retrieve its fingerprint in MD5 format.

    - name: Ensure SSH key is available
      ansible.builtin.stat:
        path: "{{ ssh_public_key_path }}"
      register: ssh_key_check
      delegate_to: localhost
      failed_when: not ssh_key_check.stat.exists
      changed_when: false
      tags: hetzner_bootstrap

    - name: Get fingerprint from public key
      ansible.builtin.command: ssh-keygen -E md5 -lf {{ ssh_public_key_path }}
      changed_when: false
      register: ssh_fingerprint
      tags: hetzner_bootstrap

    - name: Extract MD5 fingerprint
      ansible.builtin.set_fact:
        ssh_md5_fingerprint: "{{ (ssh_fingerprint.stdout | regex_search('MD5:([a-f0-9:]+)', '\\1'))[0] }}"
      changed_when: false
      tags: hetzner_bootstrap

Once the fingerprint is obtained, the next step is checking whether the key is registered in the account. If it is not found, it will be added.

    - name: Check the key in robot
      ansible.builtin.uri:
        url: https://robot-ws.your-server.de/key/{{ ssh_md5_fingerprint }}
        method: GET
        user: "{{ hetzner_user }}"
        password: "{{ hetzner_password }}"
        force_basic_auth: true
        headers:
          Content-Type: application/json
        status_code: [200, 404]
      register: check_robot_key
      tags: hetzner_bootstrap

    - name: Create new key in robot to bootstrap the server
      ansible.builtin.uri:
        url: https://robot-ws.your-server.de/key
        method: POST
        user: "{{ hetzner_user }}"
        password: "{{ hetzner_password }}"
        force_basic_auth: true
        body_format: form-urlencoded
        body:
          name: "ansible-key"
          data: "{{ lookup('ansible.builtin.file', ssh_public_key_path) }}"
        status_code: [201]
      when: check_robot_key.status == 404
      tags: hetzner_bootstrap

With the key setup complete, the process can move on to configuring the servers.

#2: Hetzner Bootstrap

To find out the mode the server is running in, just send a GET request to the Hetzner API at the relevant URL (i.e. https://robot-ws.your-server.de/boot/{{ server_id }}). Here, server_id is the unique identifier of your bare metal server. The full task is defined in the playbook as follows:

    - name: Check current boot mode
      uri:
        url: https://robot-ws.your-server.de/boot/{{ server_id }}
        method: GET
        user: "{{ hetzner_user }}"
        password: "{{ hetzner_password }}"
        force_basic_auth: yes
        headers:
          Content-Type: application/json
        status_code: 200
      vars:
        server_id: "{{ hostvars[inventory_hostname].server_id }}"
      register: boot_mode_info
      tags: hetzner_bootstrap

This task uses the hetzner_user and hetzner_password variables defined in the group_vars/all.yml file. Next, we run several checks on the API response, which include verifying the response code and its content.

    - name: Debug API response
      debug:
        var: boot_mode_info
      tags: hetzner_bootstrap

    - name: Fail if API response is invalid
      fail:
        msg: "Invalid API response. Ensure the API endpoint and credentials are correct."
      when: boot_mode_info.json is not defined
      tags: hetzner_bootstrap

For diagnostic purposes, we also output the server status to tell if rescue mode or Linux mode is active:

    - name: Debug current boot mode
      debug:
        msg:
          - "Rescue Mode active: {{ boot_mode_info.json.boot.rescue.active }}"
          - "Linux Mode active: {{ boot_mode_info.json.boot.linux.active }}"
      when: boot_mode_info is defined and boot_mode_info.json is defined
      tags: hetzner_bootstrap

If rescue mode is already enabled, we skip the activation step:

    - name: Skip activation if Rescue Mode is already enabled
      debug:
        msg: "Rescue Mode is already active on server {{ server_id }}"
      when: boot_mode_info.json.boot.rescue.active | bool
      tags: hetzner_bootstrap

If rescue mode is disabled, we enable it by invoking the necessary API method (i.e. https://robot-ws.your-server.de/boot/{{ server_id }}/rescue):

    - name: Activate Rescue Mode
      uri:
        url: https://robot-ws.your-server.de/boot/{{ server_id }}/rescue
        method: POST
        user: "{{ hetzner_user }}"
        password: "{{ hetzner_password }}"
        force_basic_auth: yes
        headers:
          Content-Type: application/x-www-form-urlencoded
        # ssh_md5_fingerprint is the fact set by the prepare_ssh_key role
        body: 'os=linux&arch=64&authorized_key[]={{ ssh_md5_fingerprint }}'
        status_code: 200
      vars:
        server_id: "{{ hostvars[inventory_hostname].server_id }}"
      register: rescue_mode_response
      when: not boot_mode_info.json.boot.rescue.active
      tags: hetzner_bootstrap

Once rescue mode is activated, we restart the server:

    - name: Reboot Server into Rescue Mode
      uri:
        url: https://robot-ws.your-server.de/reset/{{ server_id }}
        method: POST
        user: "{{ hetzner_user }}"
        password: "{{ hetzner_password }}"
        force_basic_auth: yes
        headers:
          Content-Type: application/x-www-form-urlencoded
        body: "type=hw"
        status_code: 200
      vars:
        server_id: "{{ hostvars[inventory_hostname].server_id }}"
      when: not boot_mode_info.json.boot.rescue.active
      tags: hetzner_bootstrap

#3: Install OS

With rescue mode enabled and the server rebooted, we may proceed with installing Linux. The role that does this is defined in the roles/install_os directory. The first step is to make sure installimage is present in the /root/.oldroot/nfs/install/ directory.

    - name: Check if installimage is available
      ansible.builtin.command: /root/.oldroot/nfs/install/installimage -h
      register: installimage_check
      failed_when: installimage_check.rc != 0
      changed_when: false
      tags: install_os

Install the OS distribution using the installimage utility:

    - name: Run installimage
      ansible.builtin.command: |
        /root/.oldroot/nfs/install/installimage -a \
          -d nvme0n1,nvme1n1 \
          -n {{ inventory_hostname }} \
          -i /root/.oldroot/nfs/images/{{ os_image }} \
          -p /boot/efi:esp:256M,/boot:ext3:1024M,/:ext4:all \
          -r yes \
          -l 1 \
          -K "/root/.ssh/authorized_keys"
      async: 1200
      poll: 0
      register: install_task
      tags: install_os

Note that the disk and partition layout is hard-coded in this task rather than exposed as variables, since all our servers are identical. If you want to parametrize this part, we recommend putting the default configuration into group_vars/all.yml, and the host-specific settings into host_vars/.
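One possible way to parametrize the disk layout is sketched below. The variable names install_disks, install_partitions, and install_raid_level are hypothetical and do not exist in the repository; the installimage flags are the same ones used in the task above:

```yaml
# group_vars/all.yml (hypothetical variable names, defaults for all hosts)
install_disks: "nvme0n1,nvme1n1"
install_partitions: "/boot/efi:esp:256M,/boot:ext3:1024M,/:ext4:all"
install_raid_level: 1
---
# roles/install_os/tasks/main.yml (same command, values taken from variables)
- name: Run installimage
  ansible.builtin.command: >-
    /root/.oldroot/nfs/install/installimage -a
    -d {{ install_disks }}
    -n {{ inventory_hostname }}
    -i /root/.oldroot/nfs/images/{{ os_image }}
    -p {{ install_partitions }}
    -r yes
    -l {{ install_raid_level }}
    -K "/root/.ssh/authorized_keys"
  async: 1200
  poll: 0
  register: install_task
  tags: install_os
```

A host with different drives could then override just install_disks (for example, "sda,sdb") in its own host_vars file.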

The last step is to wait for the installation to finish and then reboot the server:

    - name: Wait for installation to complete
      async_status:
        jid: "{{ install_task.ansible_job_id }}"
      register: install_status
      until: install_status.finished
      retries: 10
      delay: 60
      tags: install_os

    - name: Reboot into installed OS
      reboot:
        reboot_timeout: 300
      tags: install_os

#4: Configuring network

We use two network interfaces: external and internal. The network parameters are set individually for each server, and those settings are stored in the host_vars/node.yml file. Here’s a sample configuration:

    # Individual parameters
    interface_config:
      ethernets:
        internal:
          addresses:
            - INTERNAL_IPV4_ADDRESS/SUBNET
        external:
          addresses:
            - EXTERNAL_IPV4_ADDRESS/SUBNET
          routes:
            - to: 0.0.0.0/0
              via: GATEWAY_IPV4
              on_link: true
          nameservers:
            - DNS_SERVER1
            - DNS_SERVER2

Currently, we specify both addresses manually. If you want to enhance your Ansible playbook, you can fetch the external IPv4 address directly from the Hetzner API. As for the internal address, it can be assigned from a shared pool of addresses, for example, by querying Vault, an external database, and so on.
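As a rough illustration of the first idea, the tasks below query the Robot API for a server’s details and store its main IPv4 address. This is a sketch rather than part of the playbook: the /server/{{ server_id }} endpoint and the server.server_ip response field are assumptions to verify against the Hetzner Robot documentation, and the robot_server_info and external_ipv4 names are made up for this example:

```yaml
# Sketch: endpoint and response field names should be checked against the Robot API docs.
- name: Query server details from Hetzner Robot
  ansible.builtin.uri:
    url: https://robot-ws.your-server.de/server/{{ server_id }}
    method: GET
    user: "{{ hetzner_user }}"
    password: "{{ hetzner_password }}"
    force_basic_auth: true
    status_code: 200
  register: robot_server_info

- name: Remember the external IPv4 address
  ansible.builtin.set_fact:
    external_ipv4: "{{ robot_server_info.json.server.server_ip }}"
```

The subnet size and gateway would still have to come from somewhere else, so this only removes one of the manually maintained values.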

The netplan template is stored in roles/setup_network/templates/public_netplan_template.yaml.j2. We get the interface addresses using the following (a bit cumbersome) tasks:

    - name: Gather all network interface information
      ansible.builtin.command: ip link show
      register: interface_info
      changed_when: false
      tags: install_net

    - name: Get interfaces in UP state
      ansible.builtin.shell: |
        set -o pipefail
        ip link show | grep -B1 'state UP' | grep -E '^[0-9]+:' | awk '{print $2}' | sed 's/://'
      register: up_interfaces_output
      changed_when: false
      tags: install_net

    - name: Get interfaces in DOWN state
      ansible.builtin.shell: |
        set -o pipefail
        ip link show | grep -B1 'state DOWN' | grep -E '^[0-9]+:' | awk '{print $2}' | sed 's/://'
      register: down_interfaces_output
      changed_when: false
      tags: install_net

    - name: Set external and internal interfaces based on command output
      ansible.builtin.set_fact:
        external_interface: "{{ (up_interfaces_output.stdout_lines | first) | default('') }}"
        internal_interface: "{{ (down_interfaces_output.stdout_lines | first) | default('') }}"
      tags: install_net

Note: In the future, we should use Ansible’s built-in modules here rather than shell commands.
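A possible direction for that change is to rely on Ansible’s gathered network facts instead of parsing ip link output. The sketch below is an untested assumption rather than the playbook’s current behavior: it treats the first Ethernet interface whose active fact is true as external and the first inactive one as internal. The ether_ifaces helper variable is hypothetical:

```yaml
# Sketch only: "active" in Ansible facts is derived from the interface's operstate,
# which is close to, but not identical to, the "state UP" flag printed by `ip link`.
- name: Gather network facts
  ansible.builtin.setup:
    gather_subset:
      - network
  tags: install_net

- name: Set external and internal interfaces based on facts
  vars:
    # Per-interface facts are keyed by device name with "-" replaced by "_".
    ether_ifaces: >-
      {{ ansible_facts.interfaces
         | map('replace', '-', '_')
         | map('extract', ansible_facts)
         | selectattr('type', 'defined')
         | selectattr('type', 'equalto', 'ether')
         | list }}
  ansible.builtin.set_fact:
    external_interface: "{{ ether_ifaces | selectattr('active') | map(attribute='device') | first | default('') }}"
    internal_interface: "{{ ether_ifaces | rejectattr('active') | map(attribute='device') | first | default('') }}"
  tags: install_net
```

The order of interfaces in the facts is not guaranteed to match the ip link output, so on servers with more than two network cards the selection logic would need to be more explicit.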

To make things more user-friendly, we print out the interface names using the debug module:

    - name: Debug extracted interfaces
      debug:
        msg:
          - "External Interface (UP): {{ external_interface }}"
          - "Internal Interface (DOWN): {{ internal_interface }}"
      tags: install_net

The next step is to render the netplan template and replace the placeholder interface names with the server’s actual interface names:

    - name: Configure network interfaces
      template:
        src: public_netplan_template.yaml.j2
        dest: /etc/netplan/01-netcfg.yaml
      tags: install_net

    - name: Change name for internal interface
      replace:
        path: /etc/netplan/01-netcfg.yaml
        # capture the leading whitespace so the template's indentation is preserved
        regexp: '^(\s*)internal:'
        replace: '\g<1>{{ internal_interface }}:'
      tags: install_net

    - name: Change name for external interface
      replace:
        path: /etc/netplan/01-netcfg.yaml
        regexp: '^(\s*)external:'
        replace: '\g<1>{{ external_interface }}:'
      tags: install_net

Validate the configuration and apply it:

    - name: Validate netplan configuration
      ansible.builtin.shell: netplan generate
      tags: install_net

    - name: Apply netplan configuration
      ansible.builtin.shell: netplan apply
      tags: install_net

#5: Installing additional software

Once the Linux distribution is installed and the network is configured, we can proceed to install additional packages, following the tasks defined in roles/install_packages/tasks/main.yml:

    - name: Install packages
      become: true
      ansible.builtin.package:
        name: "{{ item }}"
        state: present
      with_items: "{{ packages_list }}"

You can define the required software packages list in group_vars/all.yml:

    packages_list:
      - curl
      - jq

#6: Create local OS users with SSH keys

At this stage, we create local users with sudo privileges according to the users_list defined in group_vars/all.yml. Each user is assigned a default password, which must be changed upon first login.

Additionally, we copy the user’s public SSH key to the system. To ensure proper setup, public keys for all users must exist in the roles/create_users/files directory and be named as {{ SYSTEM_LOGIN }}.pub.
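For orientation, the variables and the handler that this role relies on might look roughly as follows. The user names, the placeholder password, and the handler body are assumptions made for illustration; only the variable names and the handler name come from the tasks in this role:

```yaml
# group_vars/all.yml (example values; replace the placeholder password)
users_list:
  - alice
  - bob
# The user module expects a hashed password, not plain text.
users_default_password: "{{ 'CHANGE_ME' | password_hash('sha512') }}"
---
# roles/create_users/handlers/main.yml (hypothetical handler body)
- name: Enforce new user to change password on first login
  ansible.builtin.command: chage -d 0 {{ item }}   # expire the password immediately
  loop: "{{ users_list }}"
  changed_when: true
```

Note that a handler written this way expires the password of every user in users_list whenever it fires, so a stricter version would limit itself to the accounts that were actually created.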

    - name: "Sudo users: Create"
      ansible.builtin.user:
        name: "{{ item }}"
        groups: "sudo"
        password: "{{ users_default_password }}"
        update_password: on_create
      with_items: "{{ users_list }}"
      when: (ansible_distribution == 'Ubuntu')
      notify: Enforce new user to change password on first login

    - name: "Sudo users: Add authorized keys"
      ansible.posix.authorized_key:
        user: "{{ item }}"
        key: "{{ lookup('file', 'files/' + item + '.pub') }}"
      with_items: "{{ users_list }}"
      ignore_errors: true
      register: user_changed

#7: Ensuring maximum CPU performance mode

Last but not least, we create a systemd unit that switches the CPU governor to performance mode after each reboot. Without it, the default governor may vary from server to server. In our experience, it will most likely be set to powersave or schedutil, which leads to performance issues in various applications. Hence, we have the following Ansible tasks:

    - name: Copy script
      ansible.builtin.copy:
        src: set-cpufreq.sh
        dest: "/usr/local/bin/set-cpufreq.sh"
        mode: '0755'

    - name: Create systemd unit to set CPU governor to performance
      ansible.builtin.copy:
        src: set-cpufreq.service
        dest: "/etc/systemd/system/set-cpufreq.service"
        mode: '0644'

    - name: Enable and start systemd unit
      ansible.builtin.systemd:
        name: "set-cpufreq"
        enabled: true
        state: started
        daemon_reload: true

What’s next?

We’ve already used this Ansible playbook in our projects, and it gets the job done. But, you know, nothing’s perfect, and we see at least a couple of things we’d like to improve.

1. Automatic joining of nodes to Kubernetes clusters

We use Kubernetes a lot and see significant value in automating the process of connecting new servers to an existing Kubernetes cluster. We are currently using a different automation for our projects, so we haven’t included it in the playbook yet.

2. Linting errors

The existing implementation doesn’t quite follow Ansible’s best practices. In particular, we need to ditch shell commands to get network interface names (and switch to Ansible’s built-in modules) and make sure we use FQCN for modules, just as is recommended. These improvements will not only enhance the code quality but also ensure its flexibility and portability.
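As a small example of the FQCN part of that cleanup, here is how one of the existing tasks from the hetzner_bootstrap role could look after the change. Only the module name differs; the rest of the task is unchanged:

```yaml
# Before: short module name
#   debug:
#     msg: ...
# After: fully qualified collection name
- name: Debug current boot mode
  ansible.builtin.debug:
    msg:
      - "Rescue Mode active: {{ boot_mode_info.json.boot.rescue.active }}"
      - "Linux Mode active: {{ boot_mode_info.json.boot.linux.active }}"
  when: boot_mode_info is defined and boot_mode_info.json is defined
  tags: hetzner_bootstrap
```

The same substitution applies to uri, fail, template, replace, reboot, and async_status, all of which live in the ansible.builtin namespace.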

Conclusion

We’ve successfully used Ansible to deploy our Linux servers on Hetzner. It helped us cut down on manual work, speed up the process, make it more scalable, and reduce the number of errors that occur when dealing with bare metal servers.

We decided to share our results with everyone and have open-sourced them as hetzner-bare-metal-ansible on GitHub. Hopefully, it will come in handy for other SRE engineers tackling similar tasks, and with the help of the community, we can render it even more robust and adaptable. Let us know what you think, and feel free to open pull requests! We’d love your feedback.
