AI is a hot topic everywhere nowadays, and the Kubernetes-powered DevOps world is no exception. That seems quite natural for software engineers, who are by nature huge automation enthusiasts. Thus, in light of all the hype coming from ChatGPT, relevant projects for Kubernetes operators are beginning to pop up, too. Let’s see which Open Source tools, backed by OpenAI & ChatGPT, have recently surfaced to make the K8s operators’ lives easier. Most of them are designed for terminal (CLI) usage.

UPDATE: This article was revised in January 2024 to reflect the recent changes in the AI-related Kubernetes ecosystem.

Troubleshooting K8s with AI

1. K8sGPT

- “A tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English”

- Website: http://k8sgpt.ai/

- GitHub: https://github.com/k8sgpt-ai/k8sgpt

- GH stars: 4100 (+1000 since August’23)

- First commit: Mar 21, 2023

- 900+ commits, 45 releases, ~60 contributors

- Language: Go

- License: Apache 2.0

Launched by Alex Jones and marketed as “Giving Kubernetes Superpowers to everyone,” K8sGPT is the best-known, most prominent project of its kind. Moreover, it became a CNCF Sandbox project in December 2023.

Main features

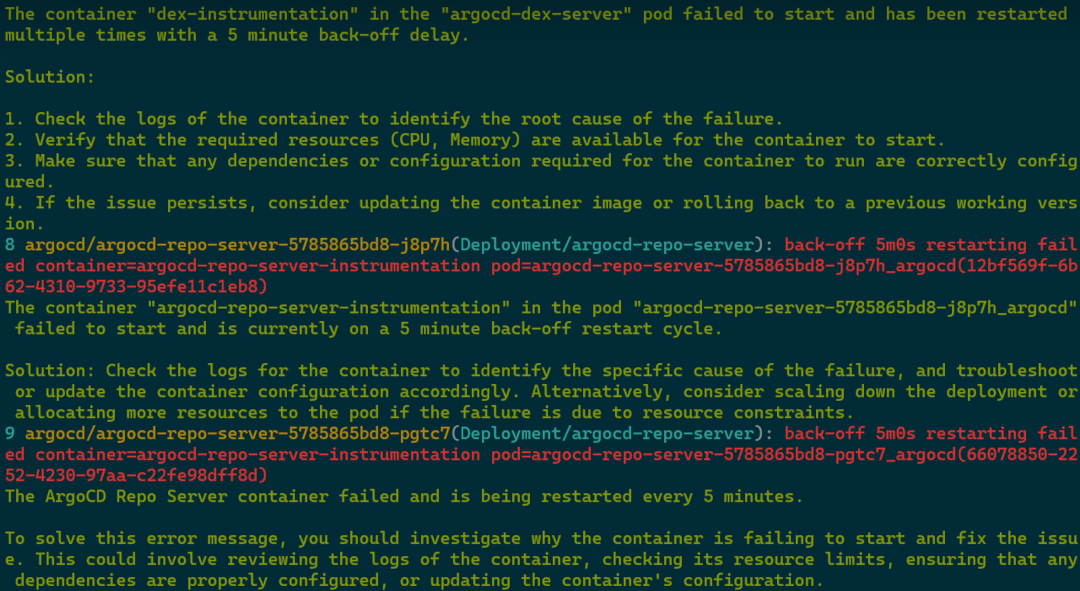

K8sGPT is a CLI tool with a primary command, k8sgpt analyze, designed to reveal the issues going on within your Kubernetes cluster. To achieve this, it uses so-called “analyzers”, which define the logic for each K8s object and the possible problems it may be encountering. E.g., an analyzer for Kubernetes Services will check whether a particular Service exists and has endpoints at all as well as whether its endpoints are Ready.
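To make the idea of an analyzer more tangible, here is a minimal Python sketch of the Service check described above. It is not K8sGPT’s actual code (the tool itself is written in Go); the `Service` and `Endpoint` classes and the `analyze_service` function are hypothetical names used for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    address: str
    ready: bool


@dataclass
class Service:
    # Hypothetical, simplified view of a Kubernetes Service and its endpoints.
    name: str
    namespace: str
    endpoints: list[Endpoint] = field(default_factory=list)


def analyze_service(svc: Service) -> list[str]:
    """Return human-readable findings for one Service (empty list means healthy)."""
    full_name = f"{svc.namespace}/{svc.name}"
    if not svc.endpoints:
        # No endpoints at all: usually a selector that matches no Pods.
        return [f"Service {full_name} has no endpoints"]
    not_ready = [ep.address for ep in svc.endpoints if not ep.ready]
    if not_ready:
        return [
            f"Service {full_name} has endpoints that are not ready: "
            + ", ".join(not_ready)
        ]
    return []


if __name__ == "__main__":
    svc = Service("web", "default", [Endpoint("10.0.0.1", True), Endpoint("10.0.0.2", False)])
    for finding in analyze_service(svc):
        print(finding)
```

Findings like these are the raw material that the AI is later asked to explain.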

Identifying such an issue is not a big deal in and of itself. The magic happens when you ask K8sGPT to explain what you can do about your existing issues, which is done by executing k8sgpt analyze --explain. The command will ask the AI for instructions for your specific case and display them for you. These instructions will list the troubleshooting actions to take, including specific kubectl commands that you can simply copy & paste, since your Kubernetes resources’ names are already in place.

Speaking of mentioning your actual resources’ names, K8sGPT boasts an anonymization feature (--anonymize flag for the k8sgpt analyze command), which will prevent sensitive data from being sent to the AI system. Helpful, isn’t it? As of right now, it is not yet implemented in all the analyzers though.
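One plausible way to implement such anonymization is to swap resource names for random tokens before the text leaves your machine and to swap them back in the AI’s answer. The sketch below illustrates that general idea in Python; it is an assumption about the approach rather than K8sGPT’s real implementation, and the `anonymize` and `deanonymize` helpers are hypothetical.

```python
import re
import secrets


def anonymize(text: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Mask every listed name with a random token; return the text and a token-to-name map."""
    token_for = {name: "masked-" + secrets.token_hex(4) for name in names if name}
    if not token_for:
        return text, {}
    # Longest names first, so "payments-db-0" wins over its prefix "payments".
    ordered = sorted(token_for, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(name) for name in ordered))
    masked = pattern.sub(lambda match: token_for[match.group(0)], text)
    return masked, {token: name for name, token in token_for.items()}


def deanonymize(text: str, mapping: dict[str, str]) -> str:
    """Restore the original names in a text that contains the random tokens."""
    if not mapping:
        return text
    pattern = re.compile("|".join(re.escape(token) for token in mapping))
    return pattern.sub(lambda match: mapping[match.group(0)], text)


if __name__ == "__main__":
    prompt = "Pod payments-db-0 in namespace payments is crashing"
    masked, mapping = anonymize(prompt, ["payments-db-0", "payments"])
    print(masked)                        # safe to send to the AI backend
    print(deanonymize(masked, mapping))  # original names restored locally
```

With this design, the mapping never leaves the local machine, so the remote model only ever sees the random tokens.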

When you run analyze --explain, there’s also an interactive mode available (the --interactive flag enables it). Using this mode, you can continue your conversation with AI regarding the problem you’re experiencing and the solution it offers.

K8sGPT features built-in analyzers for numerous Kubernetes objects, including Nodes, Pods, PVCs, ReplicaSets, Services, Events, Ingresses, StatefulSets, Deployments, CronJobs, NetworkPolicies, and even HPA and PDB. One of its recent additions is an early implementation of the log analyzer. It should not be too difficult to extend this set by creating your custom analyzers, too.

Supported AI backends & models

Another benefit is that K8sGPT is not limited to a single AI system. Yes, OpenAI is the default AI provider, giving you access to the well-known GPT-3.5-Turbo and GPT-4 language models. However, you can choose among numerous other AI providers as well, which currently (as of January’24) include:

- Azure OpenAI;

- LocalAI — a local model with OpenAI-compatible API (e.g., you can use it with llama.cpp and ggml — this will allow you to leverage the AI even in air-gapped environments);

- FakeAI — for simulating AI system behavior without actually invoking it;

- Cohere (added in July 2023);

- Amazon Bedrock (added in October 2023) — a fully managed service from AWS;

- Amazon SageMaker (added in November 2023) — allows you to leverage self-deployed and managed LLMs;

- Google Gemini models (added in January 2024).

Integrations with Trivy and Prometheus

K8sGPT also features an API for integrations, which allows you to leverage external tools, invoking their capabilities to get even more insights regarding your Kubernetes cluster’s state and potential issues. Currently, there are two integrations.

The first one is for Trivy, the well-known Open Source security scanner. Upon enabling it via k8sgpt integration activate trivy (assuming that Trivy Operator is installed inside your cluster), you will get two new filters:

- VulnerabilityReport — to discover vulnerabilities within your Kubernetes cluster;

- ConfigAuditReport — to check your cluster components’ configuration against common security standards.

To access the scanner’s output, you can execute k8sgpt analyze --filter VulnerabilityReport.

When the second integration is activated, it detects a running Prometheus in your cluster and provides you with two filters:

- PrometheusConfigValidate — to see whether your Prometheus configuration is correct and, if it’s not, what you can do to fix it;

- PrometheusConfigRelabelReport — to analyze your Prometheus relabeling rules and report which groups of labels are needed by your targets for scraping.

Installation options

Since K8sGPT is a CLI tool, you can simply download and install the binary. Prebuilt packages for various Linux distributions (Red Hat/CentOS/Fedora, Ubuntu/Debian, Alpine), macOS (brew), and Windows are available.

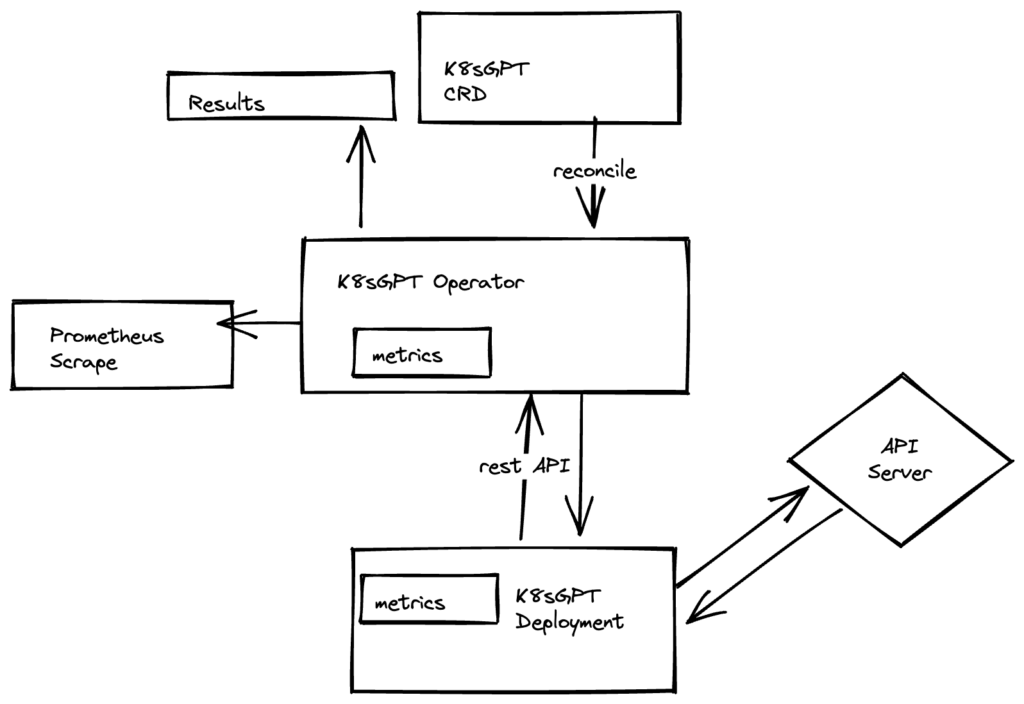

Last but not least, you can install K8sGPT as a Kubernetes operator inside your cluster. To do so, use the Helm chart provided by the project. After you install it and apply the K8sGPT configuration object (kind: K8sGPT), the tool will analyze your cluster and store the scan results in the Results objects. That means you will be able to see them by executing kubectl get results -o json | jq .

Summary

K8sGPT has already generated impressive community interest, and for good reason! Initially focused on troubleshooting your Kubernetes issues, this project is growing fast and gaining more features that help you operate K8s clusters. Its future seems genuinely bright since it is flexible in leveraging different AI systems and can be extended with custom analyzers and third-party tool integrations.

2. Kubernetes ChatGPT bot

- “A ChatGPT bot for Kubernetes issues”

- GitHub: https://github.com/robusta-dev/kubernetes-chatgpt-bot

- GH stars: over 900 (no significant changes since August’23)

- First commit: Jan 10, 2023

- ~40 commits, ~10 contributors

- Language: Python

- License: not specified

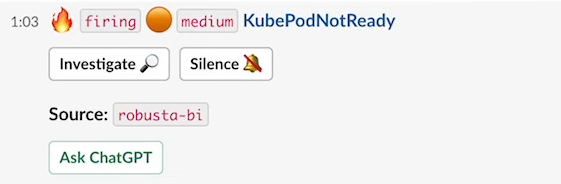

Created by Robusta, this project focuses on troubleshooting Kubernetes issues by integrating AI with your alerts displayed in Slack.

To leverage this bot, there are specific requirements you will have to follow:

- have or be ready to install Robusta on top of Prometheus (VictoriaMetrics is also supported) and AlertManager;

- use Slack.

If everything is in place, you will already have your monitoring alerts sent to Slack via an incoming webhook. This bot adds an “Ask ChatGPT” button to your alerts in Slack. Thus, clicking on it will query the AI (using your OpenAI API key) and bring you its response, instructing you on possible actions to mitigate the issue that caused this alert.
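To illustrate what clicking such a button might trigger behind the scenes, here is a hedged Python sketch that turns an alert into a request body in the OpenAI Chat Completions format (a model name plus a list of role-tagged messages). The prompt wording, the alert fields, and the `build_chat_request` function are assumptions for illustration; the bot’s real prompt may look different.

```python
import json


def build_chat_request(alert: dict, model: str = "gpt-3.5-turbo") -> dict:
    """Build a Chat Completions request body asking the AI about a firing alert."""
    # Sort labels so that the generated prompt is stable between runs.
    labels = ", ".join(f"{key}={value}" for key, value in sorted(alert.get("labels", {}).items()))
    question = (
        f"The Prometheus alert '{alert['name']}' is firing"
        + (f" with labels {labels}" if labels else "")
        + f". Description: {alert.get('description', 'n/a')}. "
        + "What are the likely causes and how can I fix it?"
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a Kubernetes troubleshooting assistant."},
            {"role": "user", "content": question},
        ],
    }


if __name__ == "__main__":
    alert = {
        "name": "KubePodCrashLooping",  # hypothetical example alert
        "labels": {"namespace": "prod", "pod": "api-0"},
        "description": "Pod is restarting repeatedly",
    }
    print(json.dumps(build_chat_request(alert), indent=2))
```

The resulting dictionary would then be sent to the API together with your OpenAI API key, and the answer posted back to the Slack thread.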

As of right now, it’s as simple as that. However, the author suggests a possible further improvement on the way it works by supplying additional data — such as Pod logs and kubectl get events output — to the AI. If it piques your interest, third-party contributors are welcome on GitHub.

UPDATE: As of January’24, this project hasn’t received any updates since August’23.

Kubectl AI-powered plugins

3. kubectl-ai

- “Kubectl plugin for OpenAI GPT”

- GitHub: https://github.com/sozercan/kubectl-ai

- GH stars: about 950 (+150 since August’23)

- First commit: Mar 20, 2023

- ~50 commits, 11 releases, ~10 contributors

- Language: Go

- License: MIT

This project was launched the day before K8sGPT was born. However, its idea of applying the power of AI to Kubernetes is entirely different. Motivated by avoiding “finding and collecting random manifests when dev/testing things,” the author wanted to simplify the generation of Kubernetes resource manifests.

Installed as a kubectl plugin, kubectl-ai features the kubectl ai command. You can use it to obtain ready-to-use YAML manifests from AI based on your needs. Here’s a self-explanatory example from its README:

    kubectl ai "create an nginx deployment with 3 replicas"
    Attempting to apply the following manifest:
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80
    Use the arrow keys to navigate: ↓ ↑ → ←
    ? Would you like to apply this? [Reprompt/Apply/Don't Apply]:
    + Reprompt
    ▸ Apply
      Don't Apply

The “reprompt” option allows you to refine the resulting manifest by changing specific parameters. You can generate a few manifests at once, which makes sense for interrelated objects, such as Deployment and Service. When you’re happy with what’s suggested, you can apply it to your cluster with ease.

You can also subsequently modify your existing Kubernetes objects by asking kubectl ai to scale them or change other parameters.

As for the AIs, kubectl-ai supports OpenAI API, Azure OpenAI Service, and LocalAI for airgap cases (a recent addition merged on July 31st, 2023). While GPT-3.5-Turbo serves as its default language model, GPT-4 is supported as well.

UPDATE: In January’24, the kubectl-ai documentation was extended, with AIKit support being mentioned as another way to use local LLMs. AIKit is a new project from the same author aiming to simplify building and deploying LLMs leveraging LocalAI and Docker’s BuildKit under the hood. There are no other changes added to kubectl-ai since August’23.

4. kubectl-gpt (not active)

- “A kubectl plugin to generate kubectl commands from natural language input by using GPT model”

- GitHub: https://github.com/devinjeon/kubectl-gpt

- GH stars: over 50 (+10 since August’23)

- First commit: May 29, 2023

- ~20 commits, 3 releases, 1 contributor

- Language: Go

- License: MIT

This plugin introduces the kubectl gpt command, whose sole mission is to make your wishes — i.e. requests stated in human language — come true in your Kubernetes cluster. Here are examples of what you can expect from this plugin as outlined in its documentation:

kubectl gpt "Print the creation time and pod name of all pods in all namespaces."

kubectl gpt "Print the memory limit and request of all pods"

kubectl gpt "Increase the replica count of the coredns deployment to 2"

kubectl gpt "Switch context to the kube-system namespace"

The outcome can be either purely informational output or real actions that affect your K8s resources. Either way, it will execute a command, but first, it will display this command so you can see it and confirm that you’re happy to carry on with it. You can also disable these features (printing a generated command/asking for confirmation) if you wish.
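The “show the command, ask for confirmation, then execute” flow can be sketched in a few lines of Python. This is an illustration of the pattern only, not the plugin’s code (kubectl-gpt is written in Go); `run_generated_command` is a hypothetical helper, and the check that the command starts with kubectl is an extra safety assumption of this sketch.

```python
import shlex
import subprocess
from typing import Callable, Optional


def _run(argv: list[str]) -> int:
    """Default executor: run the command and return its exit code."""
    return subprocess.run(argv).returncode


def run_generated_command(
    command: str,
    confirm: Callable[[str], bool],
    execute: Callable[[list[str]], int] = _run,
) -> Optional[int]:
    """Show an AI-generated command and execute it only after confirmation.

    Returns the exit code, or None if the user declined.
    """
    argv = shlex.split(command)
    # Safety assumption of this sketch: refuse anything that is not kubectl.
    if not argv or argv[0] != "kubectl":
        raise ValueError(f"refusing to run a non-kubectl command: {command!r}")
    print(f"Generated command: {command}")
    if not confirm(command):
        return None
    return execute(argv)
```

In an interactive tool, `confirm` could be something like `lambda cmd: input("Run it? [y/N] ").strip().lower() == "y"`, while passing `lambda cmd: True` corresponds to disabling the confirmation step.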

Kubectl-gpt requires an OpenAI API key to work. Only GPT-3 models are supported, with GPT-3.5-Turbo enabled by default. Any human language supported by the OpenAI GPT API can be used.

This project was developed by a solitary enthusiast and hasn’t seen any updates since the end of May. UPDATE: This is still valid in January’24; thus, kubectl-gpt isn’t being developed anymore.

5. mico (not active)

- “An AI assisted kubectl helper”

- GitHub: https://github.com/tahtaciburak/mico

- GH stars: over 30

- First commit: March 22, 2023

- ~10 commits, 1 contributor

- Language: Go

- License: MIT

Similarly to kubectl-gpt, mico generates kubectl commands based on your input written in a natural language. To do so, it leverages OpenAI’s GPT-3.5 and requires setting the OpenAI API key (it can be done via mico configure).

Apart from the initial configuration, this tool offers just one command (-p or prompt). Here is an example from the documentation of how you can use it:

mico -p "Get the version label of all pods with label app=cassandra"

… will result in displaying this command:

kubectl get pods -l app=cassandra -o jsonpath='{.items[*].metadata.labels.version}'

… which you can also send to a pipe straight away by simply executing mico -p … | bash.
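If the jsonpath expression in the generated command looks cryptic, the following Python sketch shows roughly what it selects from the output of kubectl get pods -o json: the version label of every item in the returned list. The `version_labels` function is a hypothetical helper, and silently skipping Pods without that label is a simplifying assumption of this sketch.

```python
import json


def version_labels(pod_list_json: str) -> list[str]:
    """Collect .metadata.labels.version from every item of a Pod list."""
    pod_list = json.loads(pod_list_json)
    versions = []
    for pod in pod_list.get("items", []):
        labels = pod.get("metadata", {}).get("labels", {})
        if "version" in labels:
            versions.append(labels["version"])
    return versions


if __name__ == "__main__":
    # Hypothetical, heavily trimmed output of `kubectl get pods -l app=cassandra -o json`.
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "cassandra-0", "labels": {"app": "cassandra", "version": "4.1"}}},
            {"metadata": {"name": "cassandra-1", "labels": {"app": "cassandra", "version": "4.0"}}},
        ]
    })
    print(" ".join(version_labels(sample)))
```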

Unfortunately, mico was created and developed in March 2023 and has never been updated since that time. Thus, we have to add it to the list of projects that are not active anymore.

AIOps multi-tools for Kubernetes

All the projects described in this category were launched at about the same time. They also share similar ideas, providing the user with various AI-assisted features while they are operating a Kubernetes cluster. All of them also feature similar stats: one or just a few contributors, roughly 100 stars, and dozens of commits. Let’s see what they offer and how they differ.

6. kube-copilot

- “Kubernetes Copilot powered by OpenAI”

- GitHub: https://github.com/feiskyer/kube-copilot

- GH stars: over 100 (+40 since August’23)

- First commit: Mar 25, 2023

- ~130 commits, 12 releases, 2 contributors

- Language: Go

- License: Apache 2.0

Kubernetes Copilot brings the multi-tools feature set to the next level. In addition to Kubernetes troubleshooting, auditing, and perform-any-action functionality, this multi-tool can also generate manifests based on your prompt (like kubectl-ai does).

By the way, auditing in kube-copilot is more powerful than you might expect. While the tool has the “analyze” command to reveal possible issues in your K8s resources (the way kopilot audit does), it also features the “audit” command. The latter leverages the Trivy scanner to look specifically for security problems that your Pods might have.

The last feature to mention is that this tool is happy to… google for you right in the terminal. Well, perhaps there are some questions that might be better solved this way rather than in ChatGPT. It doesn’t sound like the most important thing for the K8s-related tool, in my opinion, though.

As for the AI support, kube-copilot works with your OpenAI API key or Azure OpenAI Service. It allows you to use both the GPT-3.5 and GPT-4 (default) models.

UPDATE (January’24): Initially, kube-copilot offered a web UI in addition to the CLI one, but it was removed with its v0.5.0 release published in early January 2024. That happened at the exact moment when its code was fully rewritten in Go instead of Python 3 used originally.

Despite this tool being mainly developed by a solitary individual, its commits’ history is consistent and, therefore, promising. There is neither a public roadmap nor any issues that will shed light on how the tool will evolve, though.

7. kopilot (not active)

- “Your AI Kubernetes expert”

- GitHub: https://github.com/knight42/kopilot

- GH stars: about 170 (+30 since August’23)

- First commit: Mar 19, 2023

- ~40 commits, 3 releases, 3 contributors

- Language: Go

- License: MIT

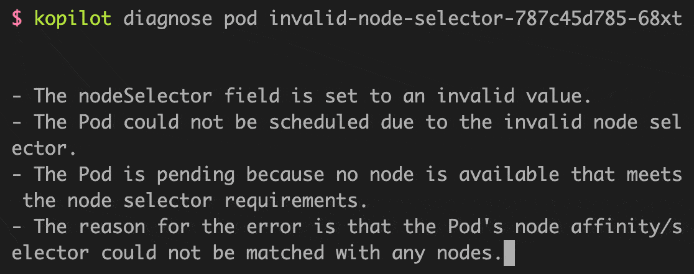

Kopilot is written in Go (as is kube-copilot since its rewrite in January 2024). It covers two functions: troubleshooting and auditing. So what do they do?

- Imagine you have a Pod that is stuck in Pending or CrashLoopBackOff. This is when the kopilot diagnose command will come in handy. It will reach out to the AI for help and print its answer with possible explanations as to why this has happened.

- Not sure if your Deployment is good enough? The kopilot audit command, using a similar approach, will check it against the well-known best practices and possible security misconfigurations.

This tool will use your OpenAI API token and the human language of your choice for the answers. The README also hints that there will be an option to use other AI services in the future.

Sadly, the project hasn’t seen any commits since the beginning of April’23, raising obvious concerns. UPDATE: This is still valid in January’24; thus, kopilot isn’t being developed anymore.

8. kopylot (not active)

- “An AI-Powered assistant for Kubernetes developers”

- GitHub: https://github.com/avsthiago/kopylot

- GH stars: over 120 (+50 since August’23)

- First commit: Mar 28, 2023

- ~70 commits, 5 releases, 2 contributors

- Language: Python

- License: MIT

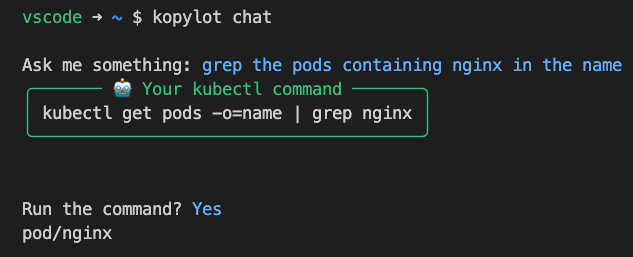

This tool has similar audit and diagnose functions and goes one step further by providing the “chat” command. It brings you a very specific chatbot experience: you can ask in English for a particular action that will be transformed into a kubectl command. If the command it prints seems fine, you can confirm its execution. That’s what we’ve seen in kubectl-gpt.

Kopylot also offers the “ctl” command, a simple wrapper for kubectl allowing you to execute any command directly, i.e. without any AI intervention. This feature seems to aim to make kopylot your best friend while working with Kubernetes instead of the good old kubectl, which is still always available, just in case.

Currently, kopylot works with an OpenAI API key only and supports no human language other than English. It relies on the text-davinci-003 GPT-3.5 model (it’s hardcoded), which is considered legacy. Support for other LLMs is mentioned in the project’s roadmap, though.

The chances of that happening are slim since its latest release is dated April 4th, 2023. UPDATE: This is still valid in January’24; thus, kopylot isn’t being developed anymore.

Others

There are more OpenAI-based tools & services for Kubernetes and its ecosystem, which I’d like to mention in this article as well.

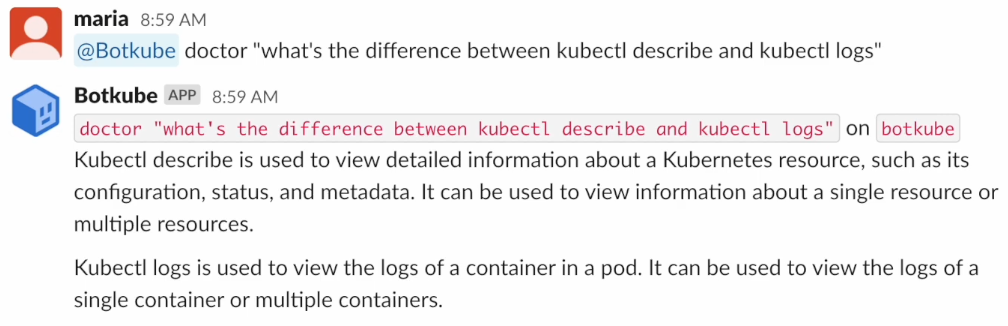

Botkube by Kubeshop is a messaging bot (i.e. ChatOps) for monitoring and debugging Kubernetes clusters in Slack, Mattermost, Discord, or Microsoft Teams. Its recently added Doctor plugin connects with the AI in two different ways: 1) directly asking the chatbot any questions you have; 2) using the “Get Help” button, which appears just below the error events. The bot will reply with AI-generated recommendations regarding your question or specific issue. An OpenAI API key is required to enable this Botkube plugin.

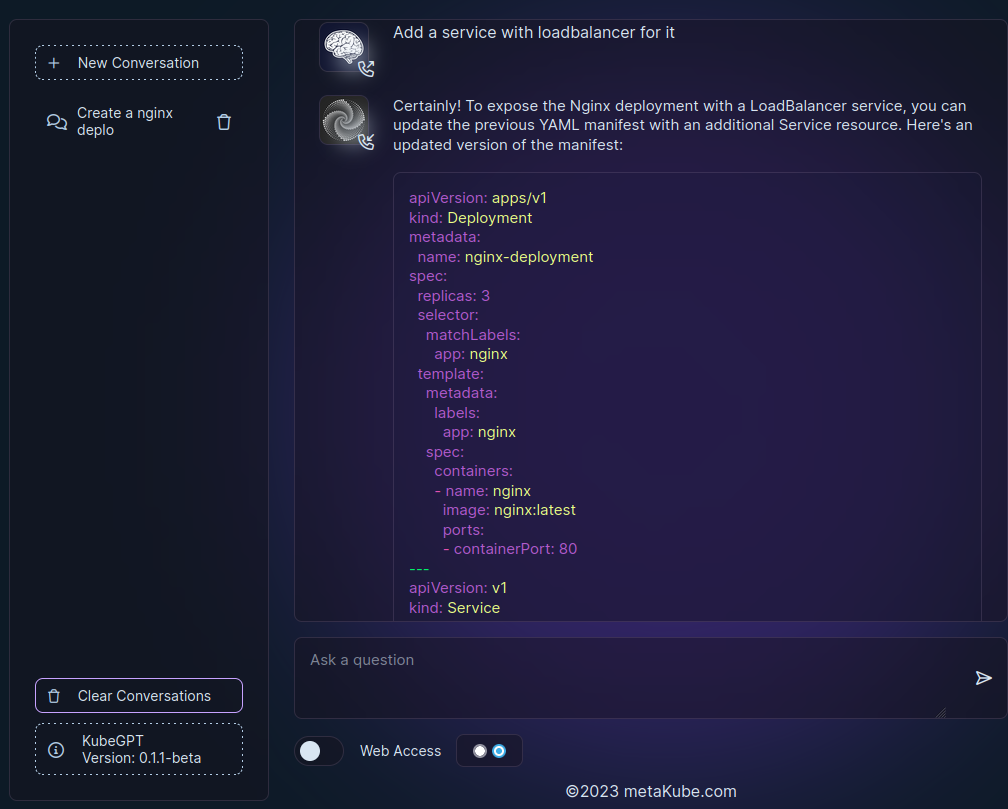

KubeGPT by metaKube is not a CLI tool but an online chat available in the web browser to talk with the AI about Kubernetes. Just like regular ChatGPT, it can answer general questions (e.g., Kubernetes architecture or some best practices) as well as generate specific YAML manifests for you.

Actually, it was willing to help me with other tech issues as well. For example, it didn’t mind giving information on Nomad. It even provided me with guidance on installing Ubuntu Linux. However, it cautioned that “its expertise lies in Kubernetes,” so, formally, it should not assist much with unrelated requests. This service is currently in the beta phase.

MagicHappens is a PoC operator that is “meant for fun and experimentation only.” It defines a new CRD (kind: MagicHappens) that lets you describe tasks in a human language — e.g., “create such a namespace and such deployment there”. When the operator receives a YAML manifest with this description, it sends it to OpenAI to get a relevant YAML and applies this resulting manifest to your cluster. Note that there have been no new commits to this project since April 2023.

Kube or Fake is a just-for-fun online service that gives you five Kubernetes terms generated by ChatGPT. Since some of them are real and others are fake, your task is to guess which is which.

Blazor k8s is a web UI written using the C# Blazor framework to manage Kubernetes, backed by OpenAI assistance. As of January’24, this project is actively developed by a single enthusiast. However, since its interface and documentation are in Chinese, it’s hard to provide more detailed information at the moment.

Not just Kubernetes tools

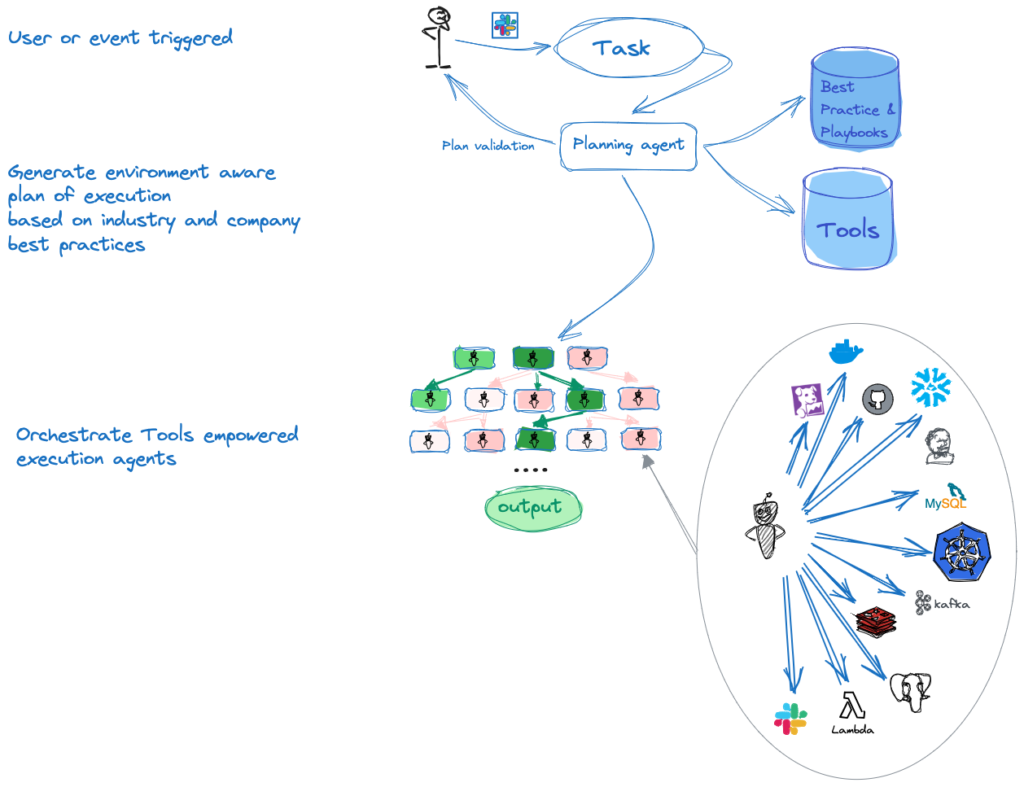

GeniA, dubbed “Your Engineering Gen AI Team member,” is a promising ChatOps solution capable of performing various DevOps- and SRE-related tasks as requested in Slack.

For example, it helps you deploy apps to Kubernetes via Argo, trigger a new Jenkins build, scale K8s resources, troubleshoot production incidents, create new cron jobs, perform security vulnerability checks, or even create cloud usage reports! (Find more cases, some illustrated with videos, here.)

GeniA requires an OpenAI API key or an Azure OpenAI account. It is written in Python and Open Source (Apache 2 license). It was actively developed by two contributors in August-September 2023 but has seen little activity since. However, with the latest commit made at the end of November 2023, hopefully, GeniA is not abandoned yet.

After all, more than 300 users have starred the project’s repo and might have some expectations of its bright future!

Appilot by Seal Inc. is an experimental project to operate your infrastructure and applications using GPT-like LLMs. It has plugins for Kubernetes and Walrus, another Open Source project from the same company that aims to be an enterprise-level XaC (Everything as Code) platform by simplifying application deployment and management on any infrastructure. Appilot can work with an OpenAI API key with GPT-4 access or with other LLMs via OpenAI-compatible API. It allows you to specify the natural language of your choice. It is written in Python and, currently (January’24), has 100+ commits made by a sole developer and boasts 100+ GitHub stars.

Finally, it is no surprise that various well-known tools in the Kubernetes-related ecosystem have been recently embedding OpenAI-powered features, too. There are actually quite a lot of them already, so I don’t even promise that I’ll list all of them. However, here are the examples I’ve seen so far — not necessarily Open Source, but at least directly related to well-known Open Source projects — presented in chronological order:

- ARMO Platform, based on Kubescape, can generate custom controls based on your request specified in a human language and then processed by GPT-3. (February 2023)

- falco-gpt generates remediation actions for Falco audit events based on the OpenAI recommendations and sends them to Slack. (April 2023)

- KubeVela Workflow allows you to use OpenAI API for validating your Kubernetes resources, ensuring their satisfactory quality, etc. (April 2023)

- Monokle by Kubeshop gained AI-assisted YAML resource creation, letting you leverage the AI to generate YAML manifests based on your prompt and validation policies. (June 2023)

- Fairwinds Insights gained the ChatGPT integration to generate OPA (Open Policy Agent) policies, powered by OpenAI. (June 2023)

- Portainer introduced experimental support for ChatGPT in its Business Edition v2.18.3, which can provide ready-to-use answers on how to deploy an app. (July 2023)

- Argo CD got an AI Assistant in the Akuity Platform. Powered by OpenAI API, it helps to detect certain issues and analyze logs, performs the actions you request it to, and answers your questions. (July 2023)

Summary

Has AIOps delivered us to the promised land yet? While there’s definitely room for improvement, the Open Source ecosystem already has viable options to offer Kubernetes administrators & users:

- K8sGPT is the most successful project overall since it has attracted numerous contributors and users. Currently focused on troubleshooting, it’s very flexible and extensible, so I’m excited to see what its authors and growing community will bring in the foreseeable future. It seems natural and promising that now it’s a CNCF project.

- Botkube by Kubeshop, ChatGPT bot by Robusta, and even GeniA are good ChatOps-style options if you want to simplify processing your monitoring alerts in Slack by adding AI-generated thoughts on what’s going on and how to fix it.

- Try kubectl-ai or kube-copilot if you need to automate the generation of your YAML manifests.

The whole AIOps thing is currently exploding. Many projects we can see today will disappear, while many new ones will emerge and become an integral part of the cloud-native ecosystem. I hope this article was helpful in providing an overview of the Open Source landscape we currently have.

P.S. This article was written in an old-fashioned way, entirely manually, with no ChatGPT involved.
