This article briefly discusses serverless computing (what it is and why it’s necessary), surveys the available Open Source self-hosted frameworks that support Kubernetes, and explores their features and limitations. It closes with the real-life serverless experience (with OpenFaaS) of one of our customers; to be frank, it was while researching that case that I came up with the idea for this review.

UPDATE (29/04/2022): Some facts concerning OpenFaaS were misrepresented in the original version of this article. They have now been corrected.

Briefly about serverless computing

Serverless architecture is a strategy focused on breaking a product down into smaller chunks and running them on the infrastructure as standalone units: functions (hence the term FaaS, Function-as-a-Service). The serverless approach is seen as the next evolutionary step in the development process: monolith → microservices → functions.

A REST API is probably the most obvious example of functionality that can be easily migrated to serverless. It is stateless: each response depends only on the client data in the request. That means you can easily split it into mini-applications (functions), one per endpoint, and deploy them in a special environment that can process client requests. What you end up with is a unified system that is pretty easy to scale and update.
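
As a minimal sketch of that idea, here is what "one function per endpoint" could look like in Python. The endpoint names, the payload shape, and the `invoke` dispatcher are all hypothetical and not tied to any particular framework; the only point is that each handler depends on nothing but the request data.

```python
import json


# Each endpoint is a standalone, stateless function: its result depends
# only on the request payload, never on data kept between calls.
def get_greeting(request: dict) -> dict:
    name = request.get("name", "world")
    return {"status": 200, "body": {"message": f"Hello, {name}!"}}


def add_numbers(request: dict) -> dict:
    try:
        total = float(request["a"]) + float(request["b"])
    except (KeyError, TypeError, ValueError):
        return {"status": 400, "body": {"error": "expected numeric 'a' and 'b'"}}
    return {"status": 200, "body": {"sum": total}}


# Hypothetical routing table. On a real FaaS platform the gateway plays
# this role, and each function is deployed and scaled on its own.
FUNCTIONS = {
    "/greeting": get_greeting,
    "/add": add_numbers,
}


def invoke(path: str, raw_body: str) -> dict:
    handler = FUNCTIONS.get(path)
    if handler is None:
        return {"status": 404, "body": {"error": f"no function for {path}"}}
    return handler(json.loads(raw_body or "{}"))
```

Because the handlers share no state, any of them can be updated or scaled without touching the others.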

The advantages of FaaS services include:

- Ease of running;

- Dynamic scaling of workers handling client requests;

- The ability to be charged only for the resources consumed.

However, there are some drawbacks that come with it as well:

- Cold start: it takes some time to get started or increase the number of workers when the load changes;

- Stateless-only mode is not suitable for all use cases because you cannot store the state of a particular function;

- Vendor lock-in and cloud-specific features may result in functional (timeouts) and financial restrictions.
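
The cold start drawback is easy to illustrate with a toy simulation. The `FunctionWorker` class and its `startup_seconds` delay below are assumptions made up for this sketch; a real platform spends that time pulling an image, starting a container, and loading code.

```python
import time


class FunctionWorker:
    """Simulates one function instance that is initialized lazily."""

    def __init__(self, startup_seconds: float = 0.05):
        self.startup_seconds = startup_seconds  # assumed cost of a cold start
        self.ready = False
        self.cold_starts = 0

    def _start(self) -> None:
        # Stand-in for pulling an image, starting a container, loading code.
        time.sleep(self.startup_seconds)
        self.ready = True
        self.cold_starts += 1

    def handle(self, payload: str) -> str:
        if not self.ready:  # first request after scale-up or scale-from-zero
            self._start()
        return payload.upper()

    def scale_to_zero(self) -> None:
        # The platform reclaims the instance; the next call is cold again.
        self.ready = False


def timed_call(worker: FunctionWorker, payload: str) -> float:
    """Return how long a single invocation takes, in seconds."""
    started = time.perf_counter()
    worker.handle(payload)
    return time.perf_counter() - started
```

The first call to a fresh worker pays the startup cost, subsequent calls do not, and calling `scale_to_zero()` brings the penalty back for the next request.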

How it all began

AWS pioneered serverless computing in 2014, introducing the Lambda platform. Despite the initially meager choice of programming languages, Lambda has managed to prove the approach’s viability and become a leader in the field. According to CNCF’s State of Cloud Native Development report, as of Q1 2021, 54% of respondents were using it.

In 2016, Microsoft launched Azure Functions (35% of respondents). In 2017, Google introduced its own Google Cloud Functions (41% of respondents). Today, AWS Lambda, Google Cloud Functions, and Azure Functions have become mature, production-ready solutions actively used by various companies.

Alongside them, Open Source self-hosted serverless frameworks have emerged as well. The latter will be the focal point of this review.

Self-hosted solutions

The emergence of serverless frameworks that can be hosted on any infrastructure happened to coincide with the growing popularity of both Docker and Kubernetes. The surge of interest in self-hosted serverless took place in 2016-2018. Iron Functions, Funktion, and OpenFaaS were introduced in 2016; Kubeless emerged in 2017; and 2018 was the year of Knative and OpenWhisk.

Self-hosted frameworks are designed to address some of the downsides of their hosted counterparts, such as vendor lock-in and a limited selection of programming languages. On top of that, you can run them on your own hardware.

From a technical point of view, the logic of the self-hosted frameworks involves packing a function into a Docker image and running it on the orchestration platform as a container. This makes the process universal but nevertheless imposes some limitations. For example, the fundamental idea of serverless – fast scaling – falters: you have to keep the resources that the functions are going to use ready at all times. Development and maintenance also become more complex than with a microservice architecture.
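
To make the "function in a container" idea more concrete, here is a rough sketch of the kind of HTTP shim such an image could run as its entrypoint. It uses only the Python standard library; the `handle` function, the `FunctionRequestHandler` class, and the port are hypothetical and do not reproduce any specific framework's wrapper.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle(body: bytes) -> bytes:
    """The user's function: stateless, bytes in, bytes out."""
    return body[::-1]


class FunctionRequestHandler(BaseHTTPRequestHandler):
    """Hypothetical shim that turns HTTP POSTs into function calls."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        result = handle(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/octet-stream")
        self.send_header("Content-Length", str(len(result)))
        self.end_headers()
        self.wfile.write(result)


def make_server(port: int = 8080) -> HTTPServer:
    # The image's entrypoint would call make_server().serve_forever().
    return HTTPServer(("0.0.0.0", port), FunctionRequestHandler)
```

The orchestrator then treats the image like any other container, which is exactly why a replica has to be running (or started) before a request can be served.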

Unfortunately, not all self-hosted solutions have passed the test of time:

- Kubeless, Iron Functions, Space Cloud, OpenLambda, Funktion, and Fn Project seem to be mostly “dead” nowadays.

- OpenWhisk could be said to be alive, but it has not had a single release since November 2020. This framework is barely mentioned, especially in the context of a self-hosted Kubernetes solution (the CNCF report for 2020 shows that only 3% of serverless users use it).

- OpenFaaS seems to be progressing well and is quite popular in the community (10% of users favor it).

- Knative is thriving and looks to be the most promising overall (27% of users choose it). Version 1.0 was released in November 2021.

- Fission has regular releases (the most recent one was on December 29, 2021), but it is not widely used (2% of users).

- Nuclio has its own Open Source version. However, it lacks auto-scaling in general and scaling to zero in particular, so it is not considered further in this article.

Let’s take a closer look at the most promising frameworks.

OpenFaaS

OpenFaaS is one of the oldest projects created by a well-known cloud-native enthusiast, Alex Ellis. You can learn more about the history of the framework in this talk.

In addition to the free version, OpenFaaS Ltd — the company behind this project — offers open-core features and enterprise support. Over the past five years, the project has scored over 21,000 stars on GitHub (and even 30,000 if we sum up all the repos from this organization on GitHub).

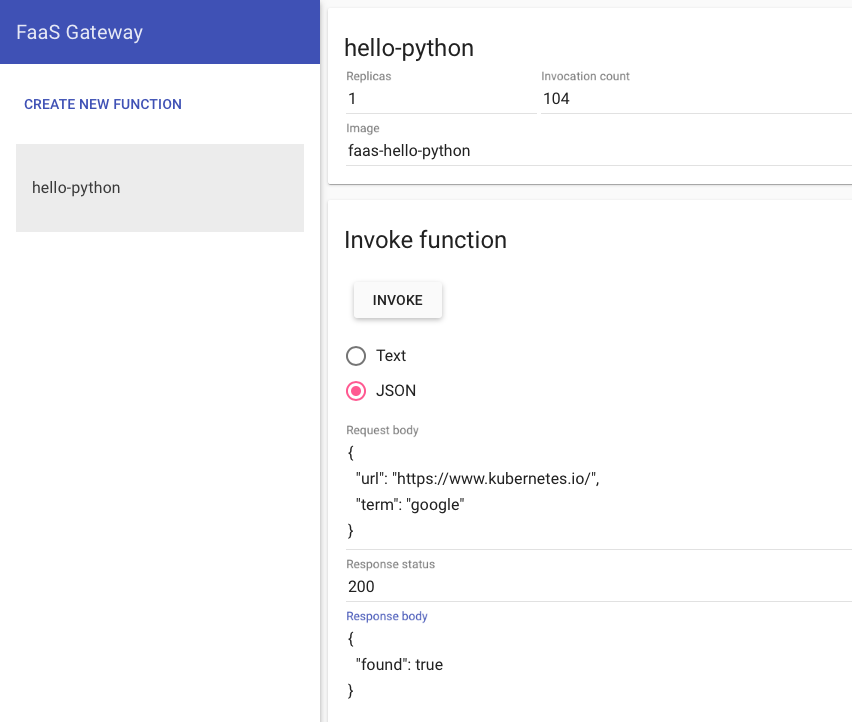

You can manage OpenFaaS via its faas-cli tool and over the web interface. The CLI can be used to manage OpenFaaS as well as to work with functions repositories (create/modify your own or use public ones).

The faas-netes package enables Kubernetes for OpenFaaS. It is an OpenFaaS provider that handles the interaction between OpenFaaS and the cluster itself. The faas-netes module can also run in operator mode, handling its own set of CRDs called Functions. If operator mode is disabled, OpenFaaS uses standard Kubernetes objects, such as Service and Deployment.

Features and advantages:

- Supports various programming languages. HTTP and stdio interfaces are available;

- Autoscaling – Prometheus (collects metrics) and Alertmanager (provides autoscaling based on collected metrics) handle this one. Can autoscale down to zero (subject to some reservations – more on that below);

- Supports Kubernetes (containerd can be an option via faasd);

- Supports Ingress at the front-end.

Limitations:

- OpenFaaS recommends at least one function replica be available for functions that need a fast response time. Scale to zero is also available but will involve a cold start.
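
The trade-off between keeping a warm replica and scaling to zero can be expressed as a small decision function. This is only an illustration of the idea, not OpenFaaS's actual autoscaling code; the function name, the `idle_timeout` value, and the other parameters are assumptions.

```python
def desired_replicas(
    current: int,
    seconds_since_last_request: float,
    pending_requests: int,
    min_replicas: int = 1,
    idle_timeout: float = 300.0,
) -> int:
    """Decide how many replicas to keep for one function.

    min_replicas=1 keeps a warm instance for latency-sensitive functions;
    min_replicas=0 allows scale to zero at the price of a cold start.
    """
    if pending_requests > 0:
        # Traffic is waiting: make sure at least one replica exists.
        return max(current, 1, min_replicas)
    if seconds_since_last_request >= idle_timeout:
        # Idle long enough: drop to the configured floor (possibly zero).
        return min_replicas
    return max(current, min_replicas)
```

With `min_replicas=1` an idle function never drops below one replica, while `min_replicas=0` frees the resources and makes the next caller wait for a cold start.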

The list of OpenFaaS users includes well-known companies, such as VMware, DigitalOcean, Citrix, and more.

Knative

Knative was originally developed by Google in collaboration with IBM, Red Hat, VMware, and SAP. Since October 2020, it has been managed by the community. The project is developing rapidly and now boasts more than 600 contributors.

There seems to be a growing interest in Knative among the big vendors. For example, Red Hat has integrated Knative support into OpenShift. In November 2021, Google asked CNCF to include Knative in the list of CNCF incubator projects. Still, any decision is yet to be made (there is an interesting article about the reasoning behind this case).

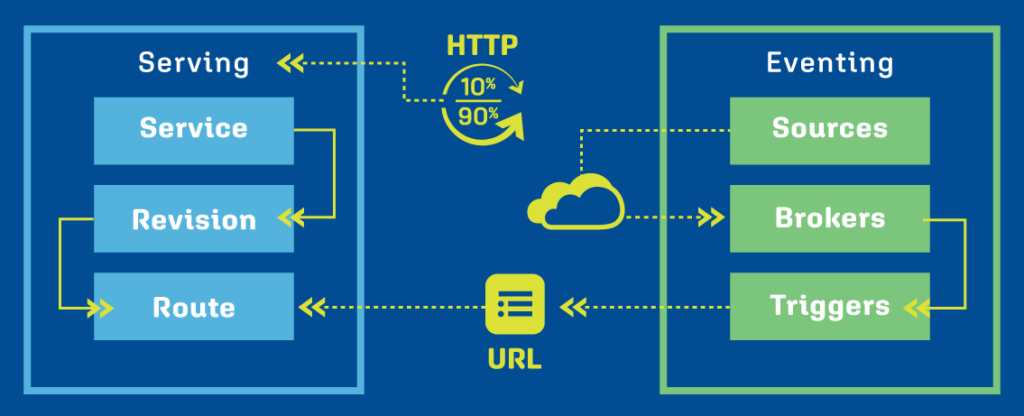

Knative is managed via the kn CLI. Using it, you can manage the project’s CRDs: Serving (continuously running services) and Eventing (event-driven services).

In the cluster, the Knative operator is responsible for running the framework. It uses Serving and Eventing to create the necessary Kubernetes objects, such as Pods.

Features and advantages:

- Can autoscale down to zero right out of the box. The developers claim to have solved the cold start problem: functions are invoked within 2 seconds of the request’s arrival;

- Supports various programming languages: Node, Java, Python, Ruby, C#, PHP, and Go. Provides ready-made templates, so you don’t have to create a Dockerfile manually;

- Has its own set of metrics and logs;

- Can send event notifications to Slack, GitHub, etc;

- Can control the resources that functions consume;

- Supports both continuously running services and event-driven ones;

- Knative is compatible with Google Cloud Run, so it should be easy to migrate if you need it.

Limitations:

- Complicated autoscaling logic;

- Focuses on Kubernetes only;

- Requires Istio as an ingress gateway (which somewhat complicates things). You can replace Istio with something else, but the authors recommend using it.

Notable Knative users include Puppet, deepc, and Outfit7.

OpenWhisk

This framework was initially developed by IBM. It is now part of the Apache Software Foundation. The project’s development has slowed down. At the same time, IBM offers its own serverless platform called IBM Cloud Functions, which is built on OpenWhisk. According to the CNCF 2021 report mentioned above, more than 15% of respondents choose IBM Cloud Functions – almost twice as many as in 2020 (8%).

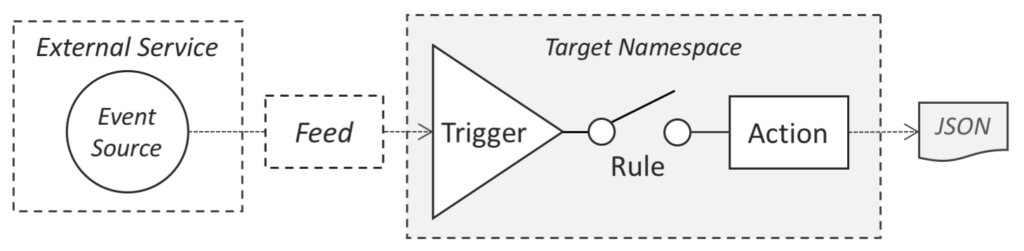

The wsk Command Line Interface (CLI) allows the user to create, run, and manage OpenWhisk entities.

Features and advantages:

- Supports Kubernetes, Docker Swarm, and Apache Mesos;

- Supports Go, Java, NodeJS, .NET, PHP, Python, Ruby, Rust, Scala, and Swift. Can run custom containers;

- Autoscaling;

- Collects metrics and logs;

- There is a ready-made REST API available;

- Integrates with Slack (via plugin);

- Can control the resources functions consume.

Limitations:

- OpenWhisk is prone to the cold start problem (although autoscaling is supported);

- OpenWhisk in Kubernetes requires Docker to work, but it’s anyone’s guess what it will look like when Docker support gets removed.

Fission

This 2018 framework is the brainchild of Platform9, a cloud service provider that supports it to this day. The GitHub README for the project lists DigitalOcean and InfraCloud as sponsors, but I could not find any specific mentions of this. It looks like Platform9 itself is the most devoted user of the framework. Overall, there’s not much information on Fission (I wonder whether this is the cause or a consequence of its small number of users).

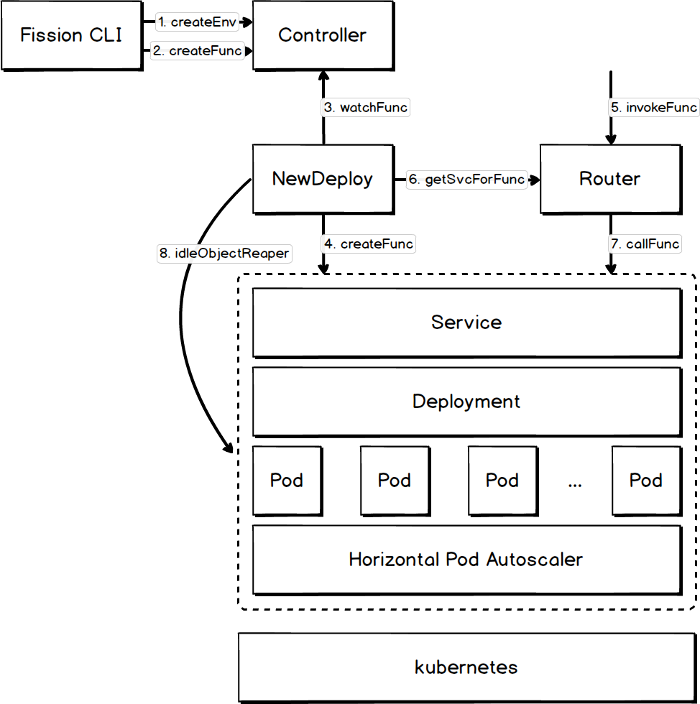

The Fission CLI helps the user to operate the framework. It has no unique CRDs and uses the standard Kubernetes primitives instead.

Features and advantages:

- Supports Node.js, Python, Ruby, Go, PHP, and Bash. Can run custom containers;

- Autoscaling;

- Collects metrics and logs;

- Supports WebHooks out of the box;

- The NewDeploy engine addresses the cold starts issue (according to the developers, the start time has been reduced to 100 ms).

Limitations:

- Focuses on Kubernetes only;

- Requires Istio as an ingress gateway (which somewhat complicates things);

- Currently, only CPU-based autoscaling is supported (the developers plan to add custom metrics later);

- Cannot control the resources functions consume.
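
For readers unfamiliar with CPU-based autoscaling, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). That Fission's autoscaling behaves exactly this way is my assumption, and the function name, defaults, and clamping bounds are illustrative; the real HPA also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_cpu_percent: float,
    target_cpu_percent: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    # Scale the replica count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))
```

A rule like this reacts only to CPU load, so a function that is slow because it waits on I/O or a queue would not be scaled up, which is why custom metrics matter.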

A bit of experience

One of our clients uses a self-hosted serverless framework at the moment. This company specializes in virtual assistants and uses OpenFaaS.

They chose OpenFaaS because it speeds up deployment, making life easier for the developer. However, they use OpenFaaS only in situations where they need to implement some API function quickly, process an external source, fulfill some partner request, etc. There is no plan to port key functionality to OpenFaaS.

They point out the following drawbacks:

- OpenFaaS authorization cannot separate rights, so in some cases, two authorizations are performed: one when accessing OpenFaaS and another in the function itself.

- On several occasions, the client experienced problems due to OpenFaaS’s inability to control resources: one of the functions used up all the RAM on the node. The problem was neither frequent nor critical enough to warrant a deeper investigation.
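
The second authorization step from the first drawback, the one performed inside the function itself, could look roughly like this. The token values, the role names, and the `handle` signature are hypothetical; this is not the client's code, and in practice the tokens would come from a secret store rather than the source.

```python
import hmac

# Hypothetical per-function access list: token -> roles.
TOKEN_ROLES = {
    "token-for-partner": {"partner"},
    "token-for-admin": {"partner", "admin"},
}


def roles_for(token: str) -> set:
    # Compare in constant time to avoid leaking token contents via timing.
    for known, roles in TOKEN_ROLES.items():
        if hmac.compare_digest(known.encode(), token.encode()):
            return roles
    return set()


def handle(headers: dict, body: str, required_role: str = "admin") -> tuple:
    """Second authorization step, performed inside the function itself."""
    auth = headers.get("Authorization", "")
    token = auth[len("Bearer "):] if auth.startswith("Bearer ") else ""
    if required_role not in roles_for(token):
        return 403, "forbidden"
    return 200, f"processed {len(body)} characters"
```

The gateway has already let the request in at this point; the function only decides whether this particular caller may use this particular function.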

In summary, our client admits OpenFaaS is not currently used at full capacity, but it makes developers’ lives a little easier in some ways (and they are happy with that).

Conclusion

What’s ahead for serverless? Well, the approach is alive and kicking (thanks in part to the efforts of the CNCF Serverless Working Group). Interestingly enough, the aforementioned CNCF report shows that the percentage of developers using serverless dropped from 27% in 2020 to 24% in 2021. The report’s authors attribute this to developers’ concerns about vendor lock-in and FaaS limitations in general.

The wider community either has not yet come to appreciate self-hosted solutions, or the latter have turned out to be too niche-oriented to enter the mainstream.

Briefly about the frameworks discussed above:

- OpenFaaS has a rich history and quite an impressive list of adopters, rendering it a mature option.

- Fission evolves mainly thanks to the enthusiasm of its creators.

- OpenWhisk seems to move more by inertia.

- Knative lives off Google’s contributions and closer integration with K8s. Over time, the project’s attractiveness to prominent vendors has increased, so it has quite a promising future.

Apache OpenWhisk got a 2.0 update a couple of months ago.

Awesome to see that, thanks for letting us know!

The changelog suggests it is a significant update for OpenWhisk, indeed. Particularly, it enables the new scheduler by default.