Vault is an open-source solution by HashiCorp for managing secrets. Its modularity and scalability let you run anything from a small development server on your laptop to a full-fledged HA cluster for production environments.

While preparing to use Vault, we asked ourselves two key questions:

- Which storage backend (the place where secrets are physically stored: a local file system, a relational database, or another storage type) should we use? Which backend do the developers recommend?

- What performance does the chosen architecture have, and how much load can it handle?

The answer to the first question seems to be simple: HashiCorp recommends Consul as the only proper choice. And that is not surprising given that both projects have the same authors… The second question is more complicated, though. But why did they bother us at all?

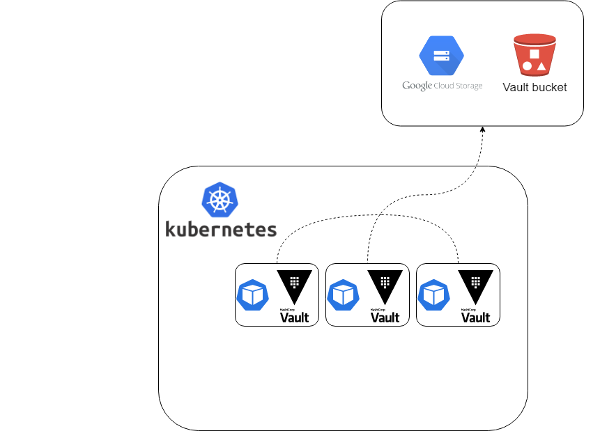

In the application where Vault is actively used, we initially deployed it with Consul, relying on the default option. Soon, we discovered that using and maintaining Consul brings significant overhead: dozens of repositories, dozens of environments, and multiple maintenance tasks (updates, reconfiguration, etc.). We discussed the situation with colleagues and decided to make our lives easier by migrating to GCS (Google Cloud Storage). This way, we could completely free ourselves from maintaining a self-hosted backend.

However, over time we realized something we had not considered: backends vary greatly in how much load they can handle. While GCS coped with the mild traffic generated during development, it could not keep up with the traffic during mass testing or demo runs in front of our customers. That naturally made us wonder what would happen in production. Given this problem, we decided to load-test various backends and examine the results.

Many parameters affect the performance of Vault: network latency, the backend type, the number of nodes in the Vault cluster, their load, etc. The results vary considerably when you modify even one parameter. In this article, we analyze only one of these factors: the “basic” (i.e., non-optimized) performance of various backends.

The good news is that many Git repositories with ready-made test scripts are publicly available. If you want to test an existing Vault installation, we suggest using, for example, Vault Benchmarking Scripts or Load Tests for Vault. Another option is to use my testing method based on HashiCorp scripts; continue reading to see how it works.

Preparing for testing

Requirements

To perform the tests described below, we need:

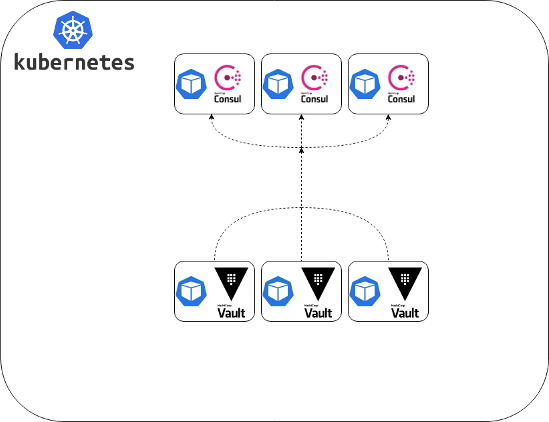

- The Vault manager running in HA mode. I am going to use a separate Kubernetes cluster to deploy three Vault clusters based on different backends.

- A separate server for running test scripts. In our case, there is an independent server next to the K8s cluster. Testing is performed over a local network to minimize network latency.

Participants

We will be testing three popular backends:

- Consul;

- PostgreSQL;

- GCS (Google Cloud Storage).

The HA support is the key criterion for selecting candidates for testing (that is why we have included GCS in the list but not AWS S3). Also, we tried to include conceptually diverse backends in the list to make the comparison more interesting and illustrative.
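For orientation, here is a minimal sketch of what the `storage` stanza in the Vault server configuration might look like for each of the three candidates. The stanza names and parameters (`address`, `path`, `connection_url`, `bucket`, `ha_enabled`) follow Vault's storage documentation as I understand it; the function name `storage_stanza`, the hosts, credentials, and bucket name are placeholders invented for illustration, not the values used in our tests. As far as I know, the PostgreSQL backend also expects its tables to be created beforehand.

```shell
#!/bin/sh
# Print a Vault `storage` stanza for one of the three tested backends.
# All addresses, credentials, and bucket names below are placeholders.
storage_stanza() {
  case "$1" in
    consul)
      cat <<'EOF'
storage "consul" {
  address = "127.0.0.1:8500"   # placeholder: local Consul agent
  path    = "vault/"
}
EOF
      ;;
    postgresql)
      cat <<'EOF'
storage "postgresql" {
  # placeholder credentials; sslmode=disable mirrors a setup without SSL
  connection_url = "postgres://vault:CHANGE_ME@postgres:5432/vault?sslmode=disable"
  ha_enabled     = "true"
}
EOF
      ;;
    gcs)
      cat <<'EOF'
storage "gcs" {
  bucket     = "my-vault-bucket"   # placeholder bucket name
  ha_enabled = "true"
}
EOF
      ;;
    *)
      echo "unknown backend: $1" >&2
      return 1
      ;;
  esac
}

# Example: show the stanza for the PostgreSQL backend
storage_stanza postgresql
```

Note that HA has to be switched on explicitly for PostgreSQL and GCS in this sketch, while the Consul stanza needs no such flag.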

The complete list of supported backends is available in Vault’s documentation. Using the algorithm outlined below, you can quickly test any other backends of your choice.

Action plan

How is the benchmarking performed?

- Writing 10 000 secrets via several threads. There is no strong rationale for this number: we picked it so that the work volume is substantial for the backend.

- Reading secrets in the same way.

- Measuring the performance of each backend.

We will be using the wrk load testing tool. It is used in the benchmarking set developed by HashiCorp itself.

Benchmarking the performance

1. Consul

First, let’s test Vault with the Consul backend. There are two tests (according to the plan): for writing and reading secrets.

Start the Consul cluster consisting of three nodes and set it as a backend for Vault running on three nodes as well.

And we’re ready to go!

# Prepare the environment variables:

export VAULT_ADDR=https://vault.service.consul:8200

export VAULT_TOKEN=YOUR_ROOT_TOKEN

# Enable Vault authorization for easy testing

vault auth enable userpass

vault write auth/userpass/users/loadtester password=benchmark policies=default

# Since Vault is not running in dev mode,

# nothing is mounted at the `secret` path yet, so enable a KV v1 engine there

vault secrets enable -path secret -version 1 kv

git clone https://github.com/hashicorp/vault-guides.git

cd vault-guides/operations/benchmarking/wrk-core-vault-operations/

# We will simultaneously record random secrets using:

# 6 threads

# 16 connections in total, shared across the threads

# 30 seconds to complete the test

# 10000 secrets to be written

nohup wrk -t6 -c16 -d30s -H "X-Vault-Token: $VAULT_TOKEN" -s write-random-secrets.lua $VAULT_ADDR -- 10000 > prod-test-write-1000-random-secrets-t6-c16-30sec.log &

Here is what we’ve got for writing:

Number of secrets is: 10000

thread 1 created

Number of secrets is: 10000

thread 2 created

Number of secrets is: 10000

thread 3 created

Number of secrets is: 10000

thread 4 created

Number of secrets is: 10000

thread 5 created

Number of secrets is: 10000

thread 6 created

Running 30s test @ http://vault:8200

6 threads and 16 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 134.96ms 68.89ms 558.62ms 84.21%

Req/Sec 16.31 6.62 50.00 87.82%

2685 requests in 30.04s, 317.27KB read

Requests/sec: 89.39

Transfer/sec: 10.56KB

thread 1 made 446 requests including 446 writes and got 443 responses

thread 2 made 447 requests including 447 writes and got 445 responses

thread 3 made 447 requests including 447 writes and got 445 responses

thread 4 made 459 requests including 459 writes and got 457 responses

thread 5 made 450 requests including 450 writes and got 448 responses

thread 6 made 449 requests including 449 writes and got 447 responses

Now it is time to perform the reading test. Let’s write 1000 secrets to the storage and then read them using the same approach as the one we used for writing.

# Writing 1000 secrets

wrk -t1 -c1 -d5m -H "X-Vault-Token: $VAULT_TOKEN" -s write-secrets.lua $VAULT_ADDR -- 1000

# Checking if the 1000th secret is created:

vault read secret/read-test/secret-1000

Key Value

--- -----

refresh_interval 768h

extra 1xxxxxxxxx2xxxxxxxxx3xxxxxxxxx4xxxxxxxxx5xxxxxxxxx6xxxxxxxxx7xxxxxxxxx8xxxxxxxxx9xxxxxxxxx0xxxxxxxxx

thread-1 write-1000

# Trying to read 1000 secrets in 4 threads simultaneously

nohup wrk -t4 -c16 -d30s -H "X-Vault-Token: $VAULT_TOKEN" -s read-secrets.lua $VAULT_ADDR -- 1000 false > prod-test-read-1000-random-secrets-t4-c16-30s.log &

Here is the result for reading:

Number of secrets is: 1000

thread 1 created with print_secrets set to false

Number of secrets is: 1000

thread 2 created with print_secrets set to false

Number of secrets is: 1000

thread 3 created with print_secrets set to false

Number of secrets is: 1000

thread 4 created with print_secrets set to false

Running 30s test @ http://vault:8200

4 threads and 16 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 2.25ms 2.88ms 54.18ms 88.77%

Req/Sec 2.64k 622.04 4.81k 65.91%

315079 requests in 30.06s, 130.08MB read

Requests/sec: 10483.06

Transfer/sec: 4.33MB

thread 1 made 79705 requests including 79705 reads and got 79700 responses

thread 2 made 79057 requests including 79057 reads and got 79053 responses

thread 3 made 78584 requests including 78584 reads and got 78581 responses

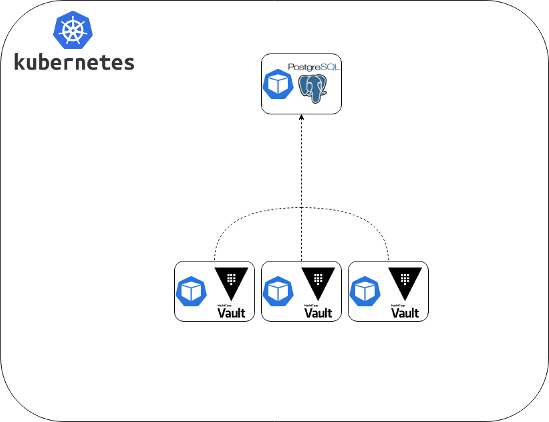

thread 4 made 77748 requests including 77748 reads and got 77745 responsess2. PostgreSQL

Now let’s perform a similar test using PostgreSQL 11.7.0 as a backend. SSL is disabled; all settings are at default values.

Here is the result of writing:

Number of secrets is: 10000

thread 1 created

Number of secrets is: 10000

thread 2 created

Number of secrets is: 10000

thread 3 created

Number of secrets is: 10000

thread 4 created

Number of secrets is: 10000

thread 5 created

Number of secrets is: 10000

thread 6 created

Running 30s test @ http://vault:8200

6 threads and 16 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 33.02ms 14.74ms 120.97ms 67.81%

Req/Sec 60.70 15.02 121.00 69.44%

10927 requests in 30.03s, 1.26MB read

Requests/sec: 363.88

Transfer/sec: 43.00KB

thread 1 made 1826 requests including 1826 writes and got 1823 responses

thread 2 made 1797 requests including 1797 writes and got 1796 responses

thread 3 made 1833 requests including 1833 writes and got 1831 responses

thread 4 made 1832 requests including 1832 writes and got 1830 responses

thread 5 made 1801 requests including 1801 writes and got 1799 responses

thread 6 made 1850 requests including 1850 writes and got 1848 responses

The same for reading:

Number of secrets is: 1000

thread 1 created with print_secrets set to false

Number of secrets is: 1000

thread 2 created with print_secrets set to false

Number of secrets is: 1000

thread 3 created with print_secrets set to false

Number of secrets is: 1000

thread 4 created with print_secrets set to false

Running 30s test @ http://vault:8200

4 threads and 16 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 2.48ms 2.93ms 71.93ms 89.76%

Req/Sec 2.11k 495.44 3.01k 65.67%

251861 requests in 30.02s, 103.98MB read

Requests/sec: 8390.15

Transfer/sec: 3.46MB

thread 1 made 62957 requests including 62957 reads and got 62952 responses

thread 2 made 62593 requests including 62593 reads and got 62590 responses

thread 3 made 63074 requests including 63074 reads and got 63070 responses

thread 4 made 63252 requests including 63252 reads and got 63249 responses

3. GCS

Now, let’s run the same set of tests using GCS as a backend (the settings are also at default values):

Writing:

Number of secrets is: 10000

thread 1 created

Number of secrets is: 10000

thread 2 created

Number of secrets is: 10000

thread 3 created

Number of secrets is: 10000

thread 4 created

Number of secrets is: 10000

thread 5 created

Number of secrets is: 10000

thread 6 created

Running 30s test @ http://vault:8200

6 threads and 16 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 209.08ms 42.58ms 654.51ms 91.96%

Req/Sec 9.89 2.45 20.00 87.21%

1713 requests in 30.04s, 202.42KB read

Requests/sec: 57.03

Transfer/sec: 6.74KB

thread 1 made 292 requests including 292 writes and got 289 responses

thread 2 made 286 requests including 286 writes and got 284 responses

thread 3 made 284 requests including 284 writes and got 282 responses

thread 4 made 289 requests including 289 writes and got 287 responses

thread 5 made 287 requests including 287 writes and got 285 responses

thread 6 made 288 requests including 288 writes and got 286 responses

Reading:

Number of secrets is: 1000

thread 1 created with print_secrets set to false

Number of secrets is: 1000

thread 2 created with print_secrets set to false

Number of secrets is: 1000

thread 3 created with print_secrets set to false

Number of secrets is: 1000

thread 4 created with print_secrets set to false

Running 30s test @ http://vault:8200

4 threads and 16 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 4.40ms 1.72ms 53.59ms 92.11%

Req/Sec 0.93k 121.29 1.19k 71.83%

111196 requests in 30.02s, 45.91MB read

Requests/sec: 3704.27

Transfer/sec: 1.53MB

thread 1 made 31344 requests including 31344 reads and got 31339 responses

thread 2 made 26639 requests including 26639 reads and got 26635 responses

thread 3 made 25690 requests including 25690 reads and got 25686 responses

thread 4 made 27540 requests including 27540 reads and got 27536 responses

Summary of results

| | Consul | PostgreSQL | GCS |
| --- | --- | --- | --- |
| write (RPS/thread) | 16.31 | 60.70 | 9.89 |
| write (total) | 2685 | 10927 | 1713 |
| read (RPS/thread) | 2640 | 2110 | 930 |
| read (total) | 315079 | 251861 | 111196 |

In the table, you can see the number of secrets:

- written per second per thread;

- written in total (in all threads) in 30 seconds;

- read per second per thread;

- read in total (in all threads) in 30 seconds.
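If you collect many wrk logs, extracting these figures by hand gets tedious. The following is a small sketch of how the relevant lines could be pulled out with awk; the function name `wrk_summary` is made up for this example, and the parsing assumes the log layout shown in the outputs above.

```shell
#!/bin/sh
# Summarize one wrk log (file argument or stdin):
# average latency, Req/Sec per thread, total requests, overall requests per second.
wrk_summary() {
  awk '
    $1 == "Latency"                { latency = $2 }     # average latency
    $1 == "Req/Sec"                { per_thread = $2 }  # average RPS per thread
    $2 == "requests" && $3 == "in" { total = $1 }       # total requests in the run
    $1 == "Requests/sec:"          { rps = $2 }         # overall RPS
    END {
      printf "latency=%s per_thread=%s total=%s rps=%s\n", latency, per_thread, total, rps
    }
  ' "$@"
}

# Example input: an excerpt of the Consul write log from this article
wrk_summary <<'EOF'
Running 30s test @ http://vault:8200
6 threads and 16 connections
Thread Stats Avg Stdev Max +/- Stdev
Latency 134.96ms 68.89ms 558.62ms 84.21%
Req/Sec 16.31 6.62 50.00 87.82%
2685 requests in 30.04s, 317.27KB read
Requests/sec: 89.39
Transfer/sec: 10.56KB
thread 1 made 446 requests including 446 writes and got 443 responses
EOF
```

For the excerpt above, this prints `latency=134.96ms per_thread=16.31 total=2685 rps=89.39`.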

So, if we actually compare these options:

- It turns out that PostgreSQL is best for writing. It is about four times more performant than Consul and about six times more performant than GCS.

- As for the reading, Consul is the obvious leader here.
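The relative differences can be checked directly from the per-thread figures in the table. This is a minimal sketch; the helper name `ratio` is made up for this example, and the inputs are the RPS/thread values reported above.

```shell
#!/bin/sh
# ratio A B: print A / B rounded to two decimals
ratio() {
  LC_ALL=C awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

echo "write, PostgreSQL vs Consul: $(ratio 60.70 16.31)x"
echo "write, PostgreSQL vs GCS:    $(ratio 60.70 9.89)x"
echo "read,  Consul vs PostgreSQL: $(ratio 2640 2110)x"
echo "read,  Consul vs GCS:        $(ratio 2640 930)x"
```

The four ratios come out as 3.72, 6.14, 1.25, and 2.84, so the gap between backends is much wider for writes than for reads.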

The more general conclusions are:

- PostgreSQL is recommended if you anticipate that the number of writes will be high. In all other cases, Consul fits just perfectly (plus, HashiCorp recommends it).

- In our experience, GCS is the most convenient if you do not want to deploy and maintain the backend yourselves. Also, it provides the so-called Auto Unseal and a built-in service for creating backups (there are some peculiarities, but we will discuss them in a separate article).

This specific comparison is not a goal in itself

I hope the testing methodology described above can help you determine the performance of various Vault backends and run your own tests.

The numbers we’ve got give you a basic idea of what load an out-of-the-box Vault (without fine-tuning and optimization) can handle. Judging by the results, the performance of the backends themselves was the limiting factor, which is why there was no need to perform additional testing (e.g., using a different number of threads). However, it can be easily done if needed.
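Should you want to vary the number of threads, one possible approach is to generate the wrk command lines first and review them before running anything. The sketch below only prints the commands; the function name `print_wrk_matrix` and the log file naming are assumptions for illustration, while the wrk flags and the Lua script are the ones used earlier in this article. The connection count is kept at 16, so the thread counts should not exceed it.

```shell
#!/bin/sh
# Print (do not run) one wrk write test per requested thread count.
# $VAULT_TOKEN and $VAULT_ADDR are left unexpanded on purpose:
# they are resolved only when the printed command is executed.
print_wrk_matrix() {
  for threads in "$@"; do
    printf 'wrk -t%s -c16 -d30s -H "X-Vault-Token: $VAULT_TOKEN" -s write-random-secrets.lua "$VAULT_ADDR" -- 10000 > write-t%s-c16.log\n' \
      "$threads" "$threads"
  done
}

# Example: commands for 2, 4, and 8 threads
print_wrk_matrix 2 4 8
```

Each printed line can then be pasted into the shell (or piped to `sh`) from the directory that contains the Lua scripts.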

During testing, we used the $VAULT_TOKEN token directly, so the authentication step is not included in the results. The results will vary depending on parameters such as:

- the number of concurrent clients;

- the authentication method in use;

- the frequency of client authentication;

- the type of secrets used;

- the origin of the requests (local or global network);

- …

Conclusion

Consider our tests as the basis for conducting your own experiments. Internal testing similar to what we performed above will help you get a more accurate idea of Vault’s behavior under loads specific to your use cases. You may need to change the configuration of the backends (not to mention switching to another backend) or modify the Lua scripts and their call parameters.

In our case, we ended up using Consul as the backend that handles our project’s loads best. Moreover, it is recommended by the Vault developers. Its maintenance still comes with noticeable overhead, though. However, there can be no compromise when it comes to stability: you have to do whatever you can to ensure that your configuration meets business requirements.
