When I heard that one famous company was moving its laptops to ARM processors, I recalled that when I had looked through the AWS EC2 offerings, I noted that Graviton processors were very attractively priced. The reason for this is apparent: they are based on the ARM architecture. At the time, I could not imagine that ARM would be a big player here…

For me, this architecture has always been associated with mobile and IoT devices. A fully-featured ARM-based server… sounds weird, right? Still, the idea stuck in my head, and one weekend I decided to try it and see what can be run on ARM nowadays. So I thought I would start with something well-known and familiar: a Kubernetes cluster! And not just “some cluster” but a real, production-grade one!

By this, I mean that the cluster should be exposed to the Internet, run some web applications, and at least have monitoring tools. To put this idea into practice, you will need two (or more) Raspberry Pis, model 3B+ or newer. Of course, I could have used AWS for experimenting, but I wanted to put the Raspberry Pis I already had to good use. Well, dictum factum! We will set up a vanilla Kubernetes cluster that includes Ingress, Prometheus, and Grafana.

Setting up Raspberry Pis

Installing OS and SSH

I did not bother much with choosing the “best” OS, and just used the latest Raspberry Pi OS Lite distribution available at the official site. There you can also find the installation documentation containing a list of actions to be performed on all nodes of the future cluster. Next, you will need to do the following for all of your nodes.

Connect the monitor/keyboard and configure the network and the SSH server:

- For the cluster to work, the master node must have a static IP address. You can also assign static addresses to worker nodes (this is optional). I used static addresses in both cases to make configuring easier.

- You can configure a static address in the OS (an example is available in /etc/dhcpcd.conf) or by making a permanent DHCP lease in the router settings (in my case, it was the home router).

- You can enable the SSH server via raspi-config (interfacing options → ssh).

After that, you can log in via SSH (the default username is pi, and the password is raspberry or the one you have provided) and keep on configuring.
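For the OS-level option mentioned above, a static-address stanza for dhcpcd might look like the sketch below. The address 192.168.88.30 is the control-plane address that appears later in this article; the interface name (eth0), the /24 prefix, and the router/DNS address are assumptions to replace with your own values. The snippet writes to a scratch file with a hypothetical name rather than to /etc/dhcpcd.conf, so you can review the result before appending it to the real config.

```shell
# Sketch only: generate an example static-IP stanza in a scratch file.
# eth0, /24, and 192.168.88.1 are assumptions; adjust them to your network.
out="${TMPDIR:-/tmp}/dhcpcd-static.example"

cat > "$out" <<'EOF'
interface eth0
static ip_address=192.168.88.30/24
static routers=192.168.88.1
static domain_name_servers=192.168.88.1
EOF

# Show the stanza for review before copying it into /etc/dhcpcd.conf.
cat "$out"
```

A permanent DHCP lease on the router achieves the same result without touching the node at all, which is convenient if you reflash SD cards often.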

Other settings

1. Let’s set the host names. In my case, I refer to the nodes as pi-control and pi-worker.

2. Now it is time to check if the filesystem is expanded to the whole SD card (df -h /). You can expand it using raspi-config if needed.

3. Let’s change the default user password in raspi-config.

4. Do not forget to disable swap (this is required by Kubernetes; see issue #53533 for more details):

dphys-swapfile swapoff
systemctl disable dphys-swapfile

5. Update packages to the latest versions:

apt-get update && apt-get dist-upgrade -y

6. Install Docker and additional packages:

apt-get install -y docker docker.io apt-transport-https curl bridge-utils iptables-persistent

When installing iptables-persistent, you’ll need to save your iptables settings for IPv4 and add a few rules to the FORWARD chain of your /etc/iptables/rules.v4 file, like this:

# Generated by xtables-save v1.8.2 on Sun Jul 19 00:27:43 2020
*filter
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
-A FORWARD -s 10.1.0.0/16 -j ACCEPT
-A FORWARD -d 10.1.0.0/16 -j ACCEPT
COMMIT

7. Reboot your Raspberry Pi.
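After the reboot, it may be worth confirming that swap really stayed off, since kubelet is sensitive to it. The following is a minimal sketch that reads the SwapTotal field from /proc/meminfo; the function names are made up for this example.

```shell
# Print the SwapTotal value (in kB) from a meminfo-style file.
# Defaults to /proc/meminfo when no file is given.
swap_total_kb() {
  awk '/^SwapTotal:/ { print $2 }' "${1:-/proc/meminfo}"
}

# Succeed only if the reported swap size is exactly zero.
swap_is_off() {
  [ "$(swap_total_kb "$1")" = "0" ]
}

if swap_is_off; then
  echo "swap is off"
else
  echo "swap is still enabled (or could not be determined)"
fi
```

If the check reports that swap is still enabled, repeating step 4 and rebooting again is the first thing to try.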

Now you are ready to install the Kubernetes cluster.

Installing Kubernetes

At this stage, I deliberately put aside all my own as well as corporate practices and tools for automating the installation/configuration of the K8s cluster. Instead, I will use the official documentation available on kubernetes.io (in a slightly stripped-down form and supplemented with my comments).

Let’s add the Kubernetes repository:

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF
sudo apt-get update

Next, the documentation suggests installing the CRI (container runtime interface). Since Docker is already installed, we proceed to the next step and install the main components:

sudo apt-get install -y kubelet kubeadm kubectl kubernetes-cni

At this step, I also installed kubernetes-cni, since it is required for the cluster to work. Please note that, for some reason, kubernetes-cni does not create the default directory for CNI settings, so you have to create it manually:

mkdir -p /etc/cni/net.d

For the network backend to be able to function (we will discuss it below), you need to install a CNI plugin. I settled on the familiar and well-known portmap plugin (a full list of plugins is available in the docs):

curl -sL https://github.com/containernetworking/plugins/releases/download/v0.7.5/cni-plugins-arm-v0.7.5.tgz | tar zxvf - -C /opt/cni/bin/ ./portmap

Configuring Kubernetes

The control-plane node

The process of installing the cluster is quite simple. To speed it up and check that the Kubernetes images are available, pull them in advance:

kubeadm config images pull

And now, to the installation itself. Let’s initialize the cluster’s control plane:

kubeadm init --pod-network-cidr=10.1.0.0/16 --service-cidr=10.2.0.0/16 --upload-certs

Note that subnets for services and pods must not overlap with each other or with existing networks.
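The non-overlap requirement is easy to get wrong with less round numbers, so here is a small sketch of how it could be checked in plain POSIX shell. The pod and service CIDRs are the ones used above; 192.168.88.0/24 is an assumption about the home network, inferred from the node addresses in this article. The helper names are hypothetical.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Runs in a subshell so the IFS change does not leak out.
ip_to_int() (
  IFS=.
  set -- $1
  echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
)

# Print "first last" (inclusive, as integers) for a CIDR such as 10.1.0.0/16.
cidr_range() (
  ip=${1%/*}
  prefix=${1#*/}
  addr=$(ip_to_int "$ip")
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  first=$(( addr & mask ))
  last=$(( first | (mask ^ 4294967295) ))
  echo "$first $last"
)

# Succeed if the two CIDRs share at least one address.
cidrs_overlap() (
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

check() {
  if cidrs_overlap "$1" "$2"; then
    echo "$1 overlaps $2"
  else
    echo "$1 and $2 are disjoint"
  fi
}

check 10.1.0.0/16 10.2.0.0/16       # pods vs. services
check 10.1.0.0/16 192.168.88.0/24   # pods vs. home network (assumed)
check 10.2.0.0/16 192.168.88.0/24   # services vs. home network (assumed)
```

All three pairs should come out as disjoint for the values used in this article; if any pair overlaps in your setup, pick different ranges before running kubeadm init.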

You will see the following message after the initialization is complete. It contains useful information on how to connect worker nodes to the control plane:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.88.30:6443 --token a485vl.xjgvzzr2g0xbtbs4 \
    --discovery-token-ca-cert-hash sha256:9da6b05aaa5364a9ec59adcc67b3988b9c1b94c15e81300560220acb1779b050 \
    --control-plane --certificate-key 72a3c0a14c627d6d7fdade1f4c8d7a41b0fac31b1faf0d8fdf9678d74d7d2403

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.88.30:6443 --token a485vl.xjgvzzr2g0xbtbs4 \
    --discovery-token-ca-cert-hash sha256:9da6b05aaa5364a9ec59adcc67b3988b9c1b94c15e81300560220acb1779b050

Let’s add the user config as the message suggests. I also recommend enabling auto-completion for kubectl:

kubectl completion bash > ~/.kube/completion.bash.inc
printf "
# Kubectl shell completion
source '$HOME/.kube/completion.bash.inc'
" >> $HOME/.bash_profile
source $HOME/.bash_profile

At this stage, you can already see the first node in the cluster (although it is still NotReady):

root@pi-control:~# kubectl get no
NAME         STATUS     ROLES    AGE   VERSION
pi-control   NotReady   master   29s   v1.18.6

Configuring the network

Next, as the message concluding the installation states, you will need to install the network into the cluster. Authors of the documentation suggest a choice of Calico, Cilium, contiv-vpp, Kube-router, and Weave Net. At this step, I put aside the formal instructions, preferring the more familiar option: flannel in the host-gw mode (for more information about available backends please check the project documentation).

You can install it into the cluster quite easily. The first step is to download manifests:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Then you have to replace vxlan with host-gw in the settings:

sed -i 's/vxlan/host-gw/' kube-flannel.yml

… and replace the pod subnet value with the one you specified during the cluster initialization:

sed -i 's#10.244.0.0/16#10.1.0.0/16#' kube-flannel.yml

Now it is time to create resources:

kubectl create -f kube-flannel.yml

Done! Shortly thereafter, the first K8s node will switch to the Ready status:

NAME         STATUS   ROLES    AGE   VERSION
pi-control   Ready    master   2m    v1.18.6

Adding a worker

It is time to add a worker node. First, you have to install Kubernetes there by following the same steps as above (everything up to, but not including, kubeadm init). Then just execute the command from the message that concludes the initialization:

kubeadm join 192.168.88.30:6443 --token a485vl.xjgvzzr2g0xbtbs4 \
    --discovery-token-ca-cert-hash sha256:9da6b05aaa5364a9ec59adcc67b3988b9c1b94c15e81300560220acb1779b050

At this point, we can assume that the cluster is ready:

root@pi-control:~# kubectl get no
NAME         STATUS   ROLES    AGE    VERSION
pi-control   Ready    master   28m    v1.18.6
pi-worker    Ready    <none>   2m8s   v1.18.6

Well, I had only two Raspberry Pis at my disposal, so I did not want to dedicate one of them solely to the control plane. That is why I removed the taint automatically applied to the pi-control node by running the following command:

root@pi-control:~# kubectl edit node pi-control

… and then deleting the lines:

  - effect: NoSchedule
    key: node-role.kubernetes.io/master

Filling the cluster with essential tools

First of all, we need Helm. Of course, you can get along just fine without it. Still, Helm makes your life easier by letting you configure some components without editing their files at all. And in the end, it is simply a binary file that requires no additional setup.

Installation instructions are available in the docs/intro/install section on the Helm site:

curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

Then you have to add chart repositories:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo add grafana https://grafana.github.io/helm-charts
helm repo add infobloxopen https://infobloxopen.github.io/cert-manager/

Let’s install infrastructure components as per the original plan:

- Ingress controller;

- Prometheus;

- Grafana;

- cert-manager.

Ingress controller

The installation of the Ingress controller is quite simple and straightforward, and it is ready to use right out of the box. To do this, go to the bare-metal section on the NGINX Ingress Controller website and run the installation command provided there:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.34.1/deploy/static/provider/baremetal/deploy.yaml

At this point, my Raspberry Pi started running slowly because of the low IOPS performance of the memory card. The thing is that many resources are installed simultaneously with the Ingress controller, a lot of requests are made, and, consequently, large amounts of data are written to etcd. Either the performance of a Class 10 card is not enough, or SD cards are not suited for such tasks in principle. Anyway, after about five minutes, everything was up and running.
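If you want a rough number for how your own card behaves, one option is to time a series of small synchronous writes, which is loosely the kind of load a database such as etcd puts on storage. The sketch below uses GNU dd with oflag=dsync (available on Raspberry Pi OS); the function name, block size, and count are arbitrary choices for illustration, and this is a crude probe rather than a proper benchmark.

```shell
# Write 256 blocks of 4 KiB, syncing after each one, and print dd's summary line.
# $1 is the directory to test (defaults to the current directory).
sync_write_test() {
  target="${1:-.}/sync-write-test.tmp"
  dd if=/dev/zero of="$target" bs=4k count=256 oflag=dsync 2>&1 | tail -n 1
  rm -f "$target"
}

# Run it against a directory that lives on the SD card.
sync_write_test /tmp
```

Comparing the reported throughput between the SD card and, say, a USB-attached drive gives a feel for how much of the slowdown is down to storage.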

As you can see, there is now an ingress-nginx namespace with all the necessary components:

root@pi-control:~# kubectl -n ingress-nginx get pod
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-2hwdx        0/1     Completed   0          31s
ingress-nginx-admission-patch-cp55c         0/1     Completed   0          31s
ingress-nginx-controller-7fd7d8df56-68qp5   1/1     Running     0          48s

Prometheus

The next two components are easy to install using Helm and a chart repository.

Let’s create a namespace for Prometheus and install the chart into it:

kubectl create ns monitoring
helm install prometheus --namespace monitoring stable/prometheus --set server.ingress.enabled=True --set server.ingress.hosts={"prometheus.home.pi"}

Prometheus requests two disks by default: one for Prometheus data and one for AlertManager data. Since our cluster does not have a storage class defined, the disks will not be allocated, and the pods will not start. Normally, we use Ceph RBD for our bare-metal Kubernetes installations. However, that is obvious overkill for a cluster running on Raspberry Pis…

So, let us define basic hostpath-based local storage. You can find persistent volume manifests for prometheus-server and prometheus-alertmanager in the prometheus-pv.yaml file in the corresponding Git repository. Please note that you have to create the PV directories beforehand on the disk of the node where Prometheus will run. In our example, the nodeAffinity rule says the Prometheus pod can only be placed on pi-worker, and the /data/localstorage/prometheus-server and /data/localstorage/prometheus-alertmanager directories already exist on that node.
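To give an idea of what such a persistent volume can look like, here is a sketch for the prometheus-server volume only. It is not the file from the repository: the output file name, the 8Gi capacity, the access mode, and the reclaim policy are assumptions, while the path and the pi-worker node name come from the description above. The manifest is written to a scratch file so it can be reviewed before use.

```shell
# Sketch only: write an example PersistentVolume manifest to a scratch file.
# Capacity, access mode, and reclaim policy are assumptions; adjust as needed.
pv_file="${TMPDIR:-/tmp}/prometheus-server-pv.example.yaml"

cat > "$pv_file" <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-server
spec:
  capacity:
    storage: 8Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: /data/localstorage/prometheus-server
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - pi-worker
EOF

cat "$pv_file"
```

A second volume for prometheus-alertmanager would follow the same pattern with its own name, path, and (typically smaller) capacity.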

Download (git clone) the manifest and add it to Kubernetes:

kubectl create -f prometheus-pv.yaml

At this stage, I encountered the first problem related to the ARM architecture. The kube-state-metrics service, which is installed by default with the Prometheus chart, failed to run. It printed the following error:

root@pi-control:~# kubectl -n monitoring logs prometheus-kube-state-metrics-c65b87574-l66d8
standard_init_linux.go:207: exec user process caused "exec format error"

The thing is that kube-state-metrics uses an image by the CoreOS project, and these images are not built for ARM:

kubectl -n monitoring get deployments.apps prometheus-kube-state-metrics -o=jsonpath={.spec.template.spec.containers[].image}
quay.io/coreos/kube-state-metrics:v1.9.7

So, I had to do a little googling to find a suitable image. Let’s update the release while specifying which kube-state-metrics image to use:

helm upgrade prometheus --namespace monitoring stable/prometheus --set server.ingress.enabled=True --set server.ingress.hosts={"prometheus.home.pi"} --set kube-state-metrics.image.repository=carlosedp/kube-state-metrics --set kube-state-metrics.image.tag=v1.9.6

And now let’s check if it is running properly:

root@pi-control:~# kubectl -n monitoring get po
NAME                                             READY   STATUS    RESTARTS   AGE
prometheus-alertmanager-df65d99d4-6d27g          2/2     Running   0          5m56s
prometheus-kube-state-metrics-5dc5fd89c6-ztmqr   1/1     Running   0          5m56s
prometheus-node-exporter-49zll                   1/1     Running   0          5m51s
prometheus-node-exporter-vwl44                   1/1     Running   0          4m20s
prometheus-pushgateway-c547cfc87-k28qx           1/1     Running   0          5m56s
prometheus-server-85666fd794-z9qnc               2/2     Running   0          4m52s

Perfect!
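Eyeballing the table works fine for six pods, but a small filter can do the same check automatically. The sketch below reads rows in the format shown above and prints the names of pods that are neither Completed nor fully ready; the function name is made up, and the sample rows are copied from the output above so that the snippet runs without a cluster.

```shell
# Read "NAME READY STATUS RESTARTS AGE" rows on stdin and print the names of
# pods that are not Completed and are either not Running or not fully ready.
not_ready_pods() {
  awk '
    $3 == "Completed" { next }
    {
      split($2, ready, "/")
      if ($3 != "Running" || ready[1] != ready[2]) print $1
    }'
}

# Sample rows from the article; on a live cluster you could pipe in
#   kubectl -n monitoring get po --no-headers
sample='prometheus-alertmanager-df65d99d4-6d27g 2/2 Running 0 5m56s
prometheus-kube-state-metrics-5dc5fd89c6-ztmqr 1/1 Running 0 5m56s
prometheus-server-85666fd794-z9qnc 2/2 Running 0 4m52s'

problems=$(printf '%s\n' "$sample" | not_ready_pods)
if [ -z "$problems" ]; then
  echo "all pods ready"
else
  echo "not ready: $problems"
fi
```

With the failing kube-state-metrics pod from earlier in the input, the filter would have printed its name instead.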

Grafana and cert-manager

Grafana is an obvious choice for visualizations and relevant dashboards. Let’s install it:

helm install grafana --namespace monitoring stable/grafana --set ingress.enabled=true --set ingress.hosts={"grafana.home.pi"}

The final message contains information on how to get the admin password:

kubectl get secret --namespace monitoring grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Cert-manager allows you to issue certificates. To install it, refer to the documentation (it includes commands suitable for Helm):

helm repo add jetstack https://charts.jetstack.io
helm install \
    cert-manager jetstack/cert-manager \
    --namespace cert-manager \
    --version v0.16.0 \
    --set installCRDs=true

That is sufficient to issue self-signed certificates for home use. If there is a need to issue Let’s Encrypt certificates, then you have to configure a cluster issuer as well. You can learn more about this in our “Using SSL certificates from Let’s Encrypt in your Kubernetes Ingress via cert-manager” article.

I decided to follow this example since the staging version of LE is sufficient in my case. Enter your own email address, save the configuration to a file (cert-manager-cluster-issuer.yaml), and add it to the cluster:

kubectl create -f cert-manager-cluster-issuer.yaml

Now you can request a certificate for, say, Grafana. This requires a domain and access to the cluster from outside. Well, I did have a domain, and I configured traffic routing by forwarding ports 80 and 443 on my home router according to the configuration of the ingress-controller service:

kubectl -n ingress-nginx get svc
NAME                       TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller   NodePort   10.2.206.61   <none>        80:31303/TCP,443:30498/TCP   23d

In this case, port 80 maps to 31303, and port 443 maps to 30498 (the node ports are randomly generated, so they will be different in your case).
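Since the node ports differ from cluster to cluster, it can be handy to extract them from the PORT(S) column instead of copying them by hand. Here is a minimal sketch that works on the column text itself; the function name is hypothetical, and the sample value is the one from the output above.

```shell
# Given a PORT(S) value such as "80:31303/TCP,443:30498/TCP" and a service
# port, print the node port that the service port is mapped to.
nodeport_for() {
  printf '%s\n' "$1" | tr ',' '\n' | sed -n "s#^$2:\([0-9][0-9]*\)/.*#\1#p"
}

ports="80:31303/TCP,443:30498/TCP"
echo "router port 80  -> node port $(nodeport_for "$ports" 80)"
echo "router port 443 -> node port $(nodeport_for "$ports" 443)"
```

The two resulting numbers are what goes into the port-forwarding rules on the router, pointing at the address of one of the cluster nodes.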

Here is an example of a certificate (cert-manager-grafana-certificate.yaml):

apiVersion: cert-manager.io/v1alpha2
kind: Certificate
metadata:
  name: grafana
  namespace: monitoring
spec:
  dnsNames:
    - grafana.home.pi
  secretName: grafana-tls
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-staging

Adding it to the cluster:

kubectl create -f cert-manager-grafana-certificate.yaml

In response, cert-manager creates a temporary Ingress resource that is used for the Let’s Encrypt validation:

root@pi-control:~# kubectl -n monitoring get ing
NAME                        CLASS    HOSTS                ADDRESS         PORTS   AGE
cm-acme-http-solver-rkf8l   <none>   grafana.home.pi      192.168.88.31   80      72s
grafana                     <none>   grafana.home.pi      192.168.88.31   80      6d17h
prometheus-server           <none>   prometheus.home.pi   192.168.88.31   80      8d

After the validation is complete, you will see that the certificate resource is ready, and the grafana-tls secret contains a certificate and key. You can immediately check the issuer of the certificate:

root@pi-control:~# kubectl -n monitoring get certificate
NAME      READY   SECRET        AGE
grafana   True    grafana-tls   13m
root@pi-control:~# kubectl -n monitoring get secrets grafana-tls -ojsonpath="{.data['tls\.crt']}" | base64 -d | openssl x509 -issuer -noout
issuer=CN = Fake LE Intermediate X1

But let’s get back to Grafana. We need to modify its Helm chart by editing the TLS settings so that they use the certificate created above.

To do this, download the chart, edit it, and perform an update using the local directory:

helm pull --untar stable/grafana

Edit the TLS parameters in the ingress section of grafana/values.yaml:

tls:
  - secretName: grafana-tls
    hosts:
      - grafana.home.pi

Also, you can use this opportunity to set your Prometheus as a datasource:

datasources:
  datasources.yaml:
    apiVersion: 1
    datasources:
      - name: Prometheus
        type: prometheus
        url: http://prometheus-server:80
        access: proxy
        isDefault: true

Now, perform an update using the local directory as a source:

helm upgrade grafana --namespace monitoring ./grafana --set ingress.enabled=true --set ingress.hosts={"grafana.home.pi"}

Check that port 443 has been added to the grafana Ingress and that it is accessible over HTTPS:

root@pi-control:~# kubectl -n monitoring get ing grafana
NAME      CLASS    HOSTS             ADDRESS         PORTS     AGE
grafana   <none>   grafana.home.pi   192.168.88.31   80, 443   63m
root@pi-control:~# curl -kI https://grafana.home.pi
HTTP/2 302
server: nginx/1.19.1
date: Tue, 28 Jul 2020 19:01:31 GMT
content-type: text/html; charset=utf-8
cache-control: no-cache
expires: -1
location: /login
pragma: no-cache
set-cookie: redirect_to=%2F; Path=/; HttpOnly; SameSite=Lax
x-frame-options: deny
strict-transport-security: max-age=15724800; includeSubDomains

The demo kube-state-metrics dashboard for Grafana is available here. Here’s what it looks like:

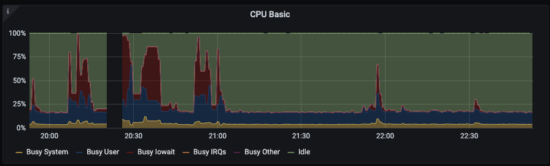

I also recommend adding a node exporter dashboard. It is ideally suited for monitoring the state of your Raspberry Pis: CPU load, memory/network/disk usage, etc.

Now you have a fully-featured cluster ready to deploy and run your applications!

A few notes on building ARM images

There are at least two ways of building ARM-compatible applications. First, you can build them on the ARM device itself. However, considering the already high utilization of both of my Raspberry Pis, I concluded that they just do not have enough resources for the build process. So I bought a new Raspberry Pi 4 (which is more powerful and has 4 GB of memory) for building ARM images.

Second, you can build a multi-architecture Docker image using a more powerful machine. The docker buildx plugin can help you with this. If your application is written in a compiled language, then you need to cross-compile it for ARM. I will not dive into details here since this approach deserves an article of its own. With this approach, you can create one-size-fits-all images: Docker running on ARM will automatically pull the relevant, ARM-compatible image.
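To make the "relevant image" part more concrete: Docker picks an image variant by platform string, and that string follows from the machine architecture. The sketch below maps common uname -m values to platform names and prints (without running it) a buildx command that would target several of them at once. The function name and the example/app:latest image name are placeholders, and the platform list is an assumption to adapt to your own targets.

```shell
# Map a machine architecture (as printed by `uname -m`) to the platform
# string Docker uses when selecting an image variant.
docker_platform() {
  case "$1" in
    x86_64)  echo "linux/amd64" ;;
    aarch64) echo "linux/arm64" ;;
    armv7l)  echo "linux/arm/v7" ;;
    armv6l)  echo "linux/arm/v6" ;;
    *)       echo "unknown" ;;
  esac
}

echo "this machine: $(docker_platform "$(uname -m)")"

# Printed only, not executed: a multi-platform build with the buildx plugin.
# example/app:latest is a placeholder image name.
echo "docker buildx build --platform linux/amd64,linux/arm/v7,linux/arm64 -t example/app:latest --push ."
```

A 32-bit Raspberry Pi OS typically reports armv7l, which explains the "exec format error" seen earlier when an image ships without a matching ARM variant.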

Conclusion

Well, the experiment has far exceeded my expectations: it looks like vanilla Kubernetes runs well on ARM machines, and its configuration involves just a couple of tricks.

It turns out that the Raspberry Pi 3B+ has plenty of CPU resources, but its SD card is a clear bottleneck. My colleagues suggested that some Raspberry Pi versions support booting from USB, so you can connect an SSD instead. That would improve the situation a lot.

Here is an example of the CPU load when installing Grafana:

A Raspberry Pi-based Kubernetes cluster, in my opinion, is an excellent choice for experimenting and trying out various approaches. I believe it conveys the nuances of cluster operation better than, say, Minikube, since you have to install and run all components of the cluster the same way as in a real-life project.

Plus, I have an idea to implement an entire CI/CD pipeline in a cluster based exclusively on Raspberry Pis. If you have experience setting up and running K8s on AWS Graviton instances, please share it in the comments below. I would appreciate it!

Yes, your “production” can be closer than you think!
