Ever needed to move an etcd store from one K8s cluster to another? Most likely you cannot simply turn it off in the process, as that could cause a minor (or major) collapse of the services that rely on it. This article discusses a not-so-obvious and not very popular way to migrate etcd* from a Kubernetes cluster hosted in one cloud to a cluster hosted in another. The method outlined below can help you avoid downtime and the consequences that come with it. Both clusters are in the cloud, which means we are sure to encounter some limitations and difficulties (we will discuss them in detail).

* We are not migrating etcd in which Kubernetes stores the whole cluster state. What we cover is a standalone etcd installation that is used by third-party applications and that runs in a K8s cluster.

So, there are two ways to migrate etcd:

- The most obvious one is to take an etcd snapshot and restore it at a new location. But that way involves downtime and therefore is not in the cards for us.

- The second method is to stretch etcd over two Kubernetes clusters. To do so, you create independent StatefulSets in each of the K8s clusters and then combine them into a single etcd cluster. This method comes with risks: an error could affect the existing etcd cluster. In return, it lets you migrate etcd between clusters without downtime. This is the method we will focus on below.

Note: In this article, we use AWS, but the process is almost identical for any other cloud provider. The K8s clusters in the examples below are managed using the Deckhouse Kubernetes platform. This means that some functionality may be platform-specific. For cases such as these, we will provide alternative ways of doing things.

We assume you have a basic understanding of etcd and have worked with this database before. We also recommend checking the official etcd documentation.

Step 1: Reducing the size of the etcd database

Note: If your etcd cluster has a limit on the number of revisions of each key, feel free to skip this section.

The first thing to consider before starting the migration is the etcd database size. A large database will increase the bootstrap time for new nodes and may potentially lead to problems. So let’s look at how you can reduce the database size.

Find out the current revision — get the list of keys:

# etcdctl get / --prefix --keys-only

/main_production/main/config

/main_production/main/failover

/main_production/main/history

…

Now let’s check a random key in the JSON format:

# etcdctl get /main_production/main/history -w=json

{"header":{"cluster_id":13812367153619139789,"member_id":7168735187350299418,"revision":5828757,..

You will see the current cluster revision (5828757 in our case).

Subtract the number of recent revisions you want to keep: in our experience, a thousand is sufficient. Run etcdctl compaction using the value you ended up with:

# etcdctl compaction 5827757

This command is global to the entire etcd cluster, so run it just once on any of the nodes. Refer to the official documentation for details on how compaction (and other etcdctl commands) works.
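The revision arithmetic above is easy to script. The following is a minimal sketch rather than part of the original procedure: the helper names extract_revision and compaction_target are hypothetical, and it assumes the JSON output has the shape shown above, with the cluster revision in the header.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative and not part of etcdctl.

# Read etcdctl JSON output on stdin and print the header revision.
# Keys such as "create_revision" or "mod_revision" do not match, because the
# pattern requires a double quote directly before the word "revision".
extract_revision() {
  sed -n 's/.*"revision":\([0-9][0-9]*\).*/\1/p'
}

# Print the revision to compact to: current revision minus the number of
# recent revisions to keep (defaults to 1000, as suggested in the text).
compaction_target() {
  current=$1
  keep=${2:-1000}
  echo $((current - keep))
}

# Example usage against a live cluster (commented out, so the sketch itself
# has no side effects):
# rev=$(etcdctl get /main_production/main/history -w=json | extract_revision)
# etcdctl compaction "$(compaction_target "$rev" 1000)"
```

Keeping the subtraction in a helper makes it harder to mistype the target revision by hand.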

Next, defrag to free up space:

# etcdctl defrag --command-timeout=90s

This command must be run on each node sequentially. We recommend defragmenting all nodes except the leader first, then moving leadership to one of the defragmented nodes using etcdctl move-leader, and finally defragmenting the former leader.
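The ordering described above (followers first, then a leadership handover, then the former leader) can be written down as a dry-run plan. This is only a sketch: defrag_plan is a hypothetical helper that prints commands instead of running them, and the endpoints and member ID passed to it are placeholders you would take from etcdctl endpoint status.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands in the recommended order.
# Usage: defrag_plan LEADER_ENDPOINT NEW_LEADER_ID ENDPOINT...
# NEW_LEADER_ID is the member ID of a follower that should take over leadership.
defrag_plan() {
  leader=$1
  new_leader_id=$2
  shift 2
  # 1. Defragment every follower, one at a time.
  for ep in "$@"; do
    if [ "$ep" != "$leader" ]; then
      echo "etcdctl defrag --endpoints=$ep --command-timeout=90s"
    fi
  done
  # 2. Hand leadership over to an already defragmented follower.
  echo "etcdctl move-leader $new_leader_id --endpoints=$leader"
  # 3. Defragment the former leader last.
  echo "etcdctl defrag --endpoints=$leader --command-timeout=90s"
}

# Example (placeholder values):
# defrag_plan etcd-0.etcd:2379 <member-id> etcd-0.etcd:2379 etcd-1.etcd:2379 etcd-2.etcd:2379
```

Printing the plan first lets you review the order before running anything against a production cluster.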

In our case, the procedure reduced the database size from 800 MB to ~700 KB, significantly cutting down the time required for the subsequent steps.

The etcd chart to use

etcd runs as a StatefulSet. Below is an example of a StatefulSet used in a cluster:

---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: etcd
  labels:
    app: etcd
spec:
  serviceName: etcd
  selector:
    matchLabels:
      app: etcd
  replicas: 3
  template:
    metadata:
      labels:
        app: etcd
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - etcd
            topologyKey: kubernetes.io/hostname
      imagePullSecrets:
      - name: registrysecret
      containers:
      - name: etcd
        image: quay.io/coreos/etcd:v3.4.18
        command:
        - sh
        args:
        - -c
        - |
          stop_handler() {
            >&2 echo "Caught SIGTERM signal!"
            kill -TERM "$child"
          }
          trap stop_handler SIGTERM SIGINT
          etcd \
          --name=$HOSTNAME \
          --initial-advertise-peer-urls=http://$HOSTNAME.etcd:2380 \
          --initial-cluster-token=etcd-cortex-prod \
          --initial-cluster etcd-0=http://etcd-0.etcd:2380,etcd-1=http://etcd-1.etcd:2380,etcd-2=http://etcd-2.etcd:2380 \
          --advertise-client-urls=http://$HOSTNAME.etcd:2379 \
          --listen-client-urls=http://0.0.0.0:2379 \
          --listen-peer-urls=http://0.0.0.0:2380 \
          --auto-compaction-mode=revision \
          --auto-compaction-retention=1000 &
          child=$!
          wait "$child"
        env:
        - name: ETCD_DATA_DIR
          value: /var/lib/etcd
        # Environment variable values must be strings, hence the quotes.
        - name: ETCD_HEARTBEAT_INTERVAL
          value: "200"
        - name: ETCD_ELECTION_TIMEOUT
          value: "2000"
        resources:
          requests:
            cpu: 50m
            memory: 1Gi
          limits:
            memory: 1Gi
        volumeMounts:
        - name: data
          mountPath: /var/lib/etcd
        ports:
        - name: etcd-server
          containerPort: 2380
        - name: etcd-client
          containerPort: 2379
        readinessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - /usr/local/bin/etcdctl endpoint health
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 10
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 2Gi
---
apiVersion: v1
kind: Service
metadata:
  name: etcd
spec:
  clusterIP: None
  ports:
  - name: etcd-server
    port: 2380
  - name: etcd-client
    port: 2379
  selector:
    app: etcd

We will cover the essential elements of the above chart as we proceed through the article.

Step 2: Making etcd nodes accessible from the outside

If clients outside the Kubernetes cluster are using etcd, chances are there are some instances to route traffic to the pods. However, in order to bootstrap new nodes, you will need each cluster node to be accessible from the outside on a preset IP address. This is the main challenge when working with a cloud cluster.

In a static Kubernetes cluster, each etcd node is easily accessible: all you need is a service like NodePort, coupled with a hard NodeSelector for the pods. In the cloud, where the pod can move to a new node at any time without its IP address being known in advance, that approach doesn’t apply.

The solution is to create three separate LoadBalancer services — we need three of them because of the “three-headed” etcd cluster. This will result in LBs being automatically provisioned by the cloud provider. Here is a chart example:

---
apiVersion: v1
kind: Service
metadata:
  name: etcd-0
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
    service.beta.kubernetes.io/aws-load-balancer-internal: "true"
    service.beta.kubernetes.io/aws-load-balancer-subnets: id
spec:
  externalTrafficPolicy: Local
  loadBalancerSourceRanges:
  - 0.0.0.0/0
  ports:
  - name: etcd-server
    port: 2380
  - name: etcd-client
    port: 2379
  selector:
    statefulset.kubernetes.io/pod-name: etcd-0
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-1
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
    service.beta.kubernetes.io/aws-load-balancer-internal: "true"
    service.beta.kubernetes.io/aws-load-balancer-subnets: id
spec:
  externalTrafficPolicy: Local
  loadBalancerSourceRanges:
  - 0.0.0.0/0
  ports:
  - name: etcd-server
    port: 2380
  - name: etcd-client
    port: 2379
  selector:
    statefulset.kubernetes.io/pod-name: etcd-1
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-2
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
    service.beta.kubernetes.io/aws-load-balancer-internal: "true"
    service.beta.kubernetes.io/aws-load-balancer-subnets: id
spec:
  externalTrafficPolicy: Local
  loadBalancerSourceRanges:
  - 0.0.0.0/0
  ports:
  - name: etcd-server
    port: 2380
  - name: etcd-client
    port: 2379
  selector:
    statefulset.kubernetes.io/pod-name: etcd-2
  type: LoadBalancer

The service.beta.kubernetes.io/aws-load-balancer-internal: "true" annotation defines the kind of load balancer (private IP) to be provisioned. The service.beta.kubernetes.io/aws-load-balancer-subnets: id annotation specifies the network to be used by the LB. Most cloud providers have this functionality — only the annotations will differ.

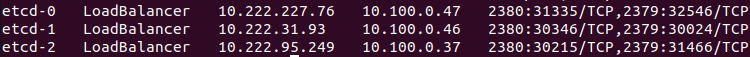

Let’s look at the resources we have in the cluster:

Is the etcd client available?

# telnet 10.100.0.47 2379

Trying 10.100.0.47...

Connected to 10.100.0.47.

Escape character is '^]'.

Great: our etcd nodes are now accessible from the outside!

Now let’s create the same services in the new cluster. Note that at this point, we are only creating services in the new K8s cluster, not StatefulSets. (You will have to create a StatefulSet in the new cluster with a name that differs from the one in the existing cluster. The hostname in the pods must be different because we are using it as the etcd node name.)

Later, we will call our StatefulSet in the new cluster etcd-main (you may use any other name you wish), so let’s change the selectors and service names to match this new name:

…
  name: etcd-main-0
…
  selector:
    statefulset.kubernetes.io/pod-name: etcd-main-0
…

On top of that, you have to change the values in the service.beta.kubernetes.io/aws-load-balancer-subnets: id annotation to the corresponding network ID in the new Kubernetes cluster. No changes are required in any other service resources.
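Since the three Service manifests differ only in the pod index, the StatefulSet name, and the subnet, they can be generated instead of copied. The sketch below is one way to do that under those assumptions; render_lb_services is a hypothetical helper, and the subnet ID you pass in is whatever your cloud network uses.

```shell
#!/bin/sh
# Usage: render_lb_services STATEFULSET_NAME SUBNET_ID [REPLICAS]
# Prints one LoadBalancer Service per pod, mirroring the manifests above.
render_lb_services() {
  sts=$1
  subnet=$2
  replicas=${3:-3}
  i=0
  while [ "$i" -lt "$replicas" ]; do
    cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  name: $sts-$i
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
    service.beta.kubernetes.io/aws-load-balancer-internal: "true"
    service.beta.kubernetes.io/aws-load-balancer-subnets: $subnet
spec:
  externalTrafficPolicy: Local
  loadBalancerSourceRanges:
  - 0.0.0.0/0
  ports:
  - name: etcd-server
    port: 2380
  - name: etcd-client
    port: 2379
  selector:
    statefulset.kubernetes.io/pod-name: $sts-$i
  type: LoadBalancer
EOF
    i=$((i + 1))
  done
}

# Example (placeholder subnet ID):
# render_lb_services etcd-main <subnet-id> | kubectl apply -f -
```

Calling it with etcd as the first argument reproduces the services for the old cluster, and etcd-main gives the ones for the new cluster.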

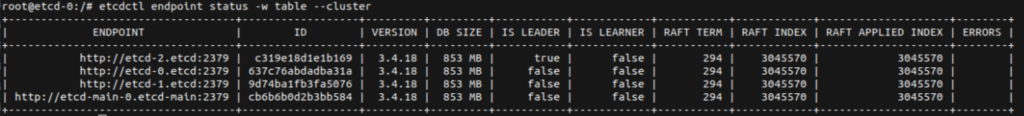

Let’s see what we’ve got here:

Checking availability is not yet worthwhile, since there are no pods to which these services point.

Step 3: DNS magic

So we have made new nodes available at the IP address level. Now it’s time to look at the node IDs in etcd. Below are the startup parameters:

--name=$HOSTNAME \
--initial-advertise-peer-urls=http://$HOSTNAME.etcd:2380 \
--advertise-client-urls=http://$HOSTNAME.etcd:2379 \

We won’t elaborate on what each parameter does: you can learn more about them in the official documentation. What is important here is that the name of the node is the pod’s hostname. Nodes connect to each other using a name of the form <hostname>.<service name> (etcd-0.etcd, for example). In order for a new node to work, you have to ensure that this name resolves from the pods. There are several ways to do that:

- The easiest way is to add static records to the pods’ /etc/hosts by editing the StatefulSet. The downside is that it requires the pods to be restarted.

- Another way is to resolve names at the kube-dns level. That’s the one we’re going to use in our case. In the example below, static records are added using the Deckhouse kube-dns module:

spec:
  settings:
    hosts:
    - domain: etcd-main-0
      ip: 10.106.0.34
    - domain: etcd-main-1
      ip: 10.106.0.42
    - domain: etcd-main-2
      ip: 10.106.0.47
    - domain: etcd-main-0.etcd-main
      ip: 10.106.0.34
    - domain: etcd-main-1.etcd-main
      ip: 10.106.0.42
    - domain: etcd-main-2.etcd-main
      ip: 10.106.0.47

Let’s check how the names resolve from a pod:

# host etcd-main-0

etcd-main-0 has address 10.106.0.34

# host etcd-main-0.etcd-main

etcd-main-0.etcd-main has address 10.106.0.34

It’s all okay now! Let’s do the same trick in the new cluster and add the static records for the etcd nodes from the old cluster:

spec:
  settings:
    hosts:
    - domain: etcd-0
      ip: 10.100.0.47
    - domain: etcd-1
      ip: 10.100.0.46
    - domain: etcd-2
      ip: 10.100.0.37
    - domain: etcd-0.etcd
      ip: 10.100.0.47
    - domain: etcd-1.etcd
      ip: 10.100.0.46
    - domain: etcd-2.etcd
      ip: 10.100.0.37

Well, this magic was quite easy, wasn’t it?
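If the Deckhouse kube-dns module is not available, the same name-to-IP mapping can be expressed as /etc/hosts lines, which is the first option listed above. The sketch below only generates the lines; hosts_records is a hypothetical helper, and how the records reach the pods (for instance, through the hostAliases field of the pod spec) is left out.

```shell
#!/bin/sh
# Usage: hosts_records STATEFULSET_NAME IP...
# Prints one /etc/hosts-style line per pod: the IP, the short pod name, and the
# <pod>.<statefulset> name used in the peer URLs. IPs are given in pod order.
hosts_records() {
  sts=$1
  shift
  i=0
  for ip in "$@"; do
    echo "$ip $sts-$i $sts-$i.$sts"
    i=$((i + 1))
  done
}

# Records for the new cluster's nodes, using the load balancer IPs from above:
# hosts_records etcd-main 10.106.0.34 10.106.0.42 10.106.0.47
```

Each line carries both name forms, matching the pairs of records added through kube-dns.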

Step 4: Adding new nodes to the etcd cluster

At last, we have reached the point where we can add new nodes to the etcd cluster, stretching it over our two Kubernetes clusters. To do so, run the command below in any of the active etcd pods:

etcdctl member add etcd-main-0 --peer-urls=http://etcd-main-0.etcd-main:2380

Since we already know the name of the StatefulSet (etcd-main), we also know the names of the new pods.

Important note: You may ask, “Why not add all the new nodes at once?” The thing is, registering all three new members at once would give us a 6-member cluster with a quorum of 4 while only the three old members are actually running. That results in a quorum loss, which will cause the existing nodes to fail.
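The quorum arithmetic behind this note can be checked with a few lines of shell. This is an illustrative sketch with hypothetical helper names, using the usual majority rule (more than half of the registered members).

```shell
#!/bin/sh
# quorum N: number of members that must be up in an N-member cluster.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# has_quorum MEMBERS RUNNING: succeeds if RUNNING members form a majority
# of the MEMBERS registered in the cluster.
has_quorum() {
  [ "$2" -ge "$(quorum "$1")" ]
}

# Registering one new member: 4 members, 3 running. Quorum is 3, still fine.
# has_quorum 4 3 && echo "safe"
# Registering three new members at once: 6 members, 3 running. Quorum is 4.
# has_quorum 6 3 || echo "quorum lost"
```

Adding members one at a time, and waiting for each to start, keeps the number of running members at or above the quorum throughout.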

Now let’s edit the chart for deployment to the new cluster:

---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: etcd-main
  labels:
    app: etcd-main
spec:
  serviceName: etcd
  selector:
    matchLabels:
      app: etcd
  replicas: 1
  template:
    metadata:
      labels:
        app: etcd
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - etcd
            topologyKey: kubernetes.io/hostname
      imagePullSecrets:
      - name: registrysecret
      containers:
      - name: etcd
        image: quay.io/coreos/etcd:v3.4.18
        command:
        - sh
        args:
        - -c
        - |
          stop_handler() {
            >&2 echo "Caught SIGTERM signal!"
            kill -TERM "$child"
          }
          trap stop_handler SIGTERM SIGINT
          etcd \
          --name=$HOSTNAME \
          --initial-advertise-peer-urls=http://$HOSTNAME.etcd-main:2380 \
          --initial-cluster-state existing \
          --initial-cluster-token=etcd-cortex-prod \
          --initial-cluster etcd-main-0=http://etcd-main-0.etcd-main:2380,etcd-0=http://etcd-0.etcd:2380,etcd-1=http://etcd-1.etcd:2380,etcd-2=http://etcd-2.etcd:2380 \
          --advertise-client-urls=http://$HOSTNAME.etcd:2379 \
          --listen-client-urls=http://0.0.0.0:2379 \
          --listen-peer-urls=http://0.0.0.0:2380 \
          --auto-compaction-mode=revision \
          --auto-compaction-retention=1000 &
          child=$!
          wait "$child"
        env:
        - name: ETCD_DATA_DIR
          value: /var/lib/etcd
        # Environment variable values must be strings, hence the quotes.
        - name: ETCD_HEARTBEAT_INTERVAL
          value: "200"
        - name: ETCD_ELECTION_TIMEOUT
          value: "2000"
        resources:
          requests:
            cpu: 50m
            memory: 1Gi
          limits:
            memory: 1Gi
        volumeMounts:
        - name: data
          mountPath: /var/lib/etcd
        ports:
        - name: etcd-server
          containerPort: 2380
        - name: etcd-client
          containerPort: 2379
        readinessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - /usr/local/bin/etcdctl endpoint health
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 10
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 2Gi

In it, both the name and the start command have changed. Let’s look at the latter in greater detail:

- The command now has the --initial-cluster-state existing flag. It indicates that the node is joining an existing cluster rather than bootstrapping a new one (see the documentation for details).

- The --initial-advertise-peer-urls parameter is different because the name of the StatefulSet has changed.

- Most importantly, the --initial-cluster flag has changed. It lists all the existing cluster members, including the new etcd-main-0 node.

The nodes are added one by one, so the replicas key must be set to 1 for the first deployment.

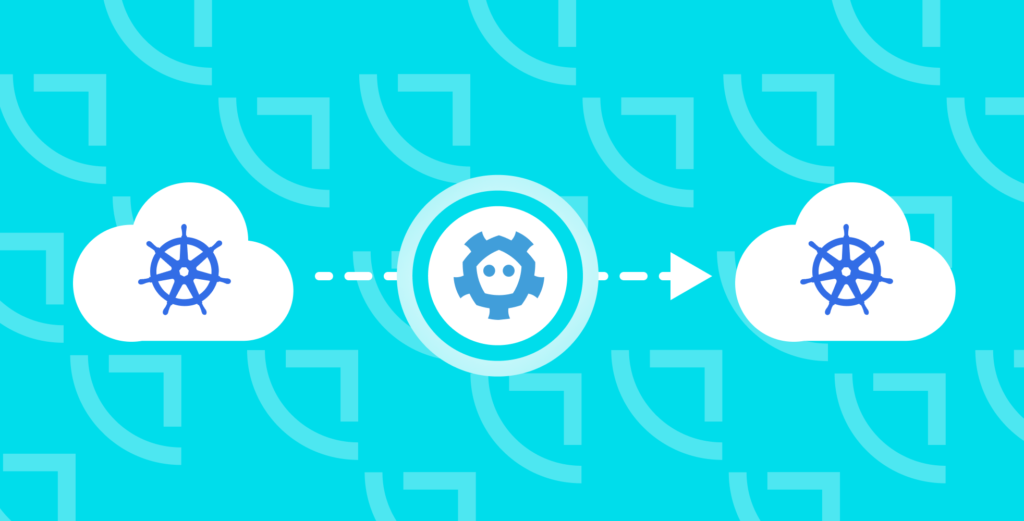

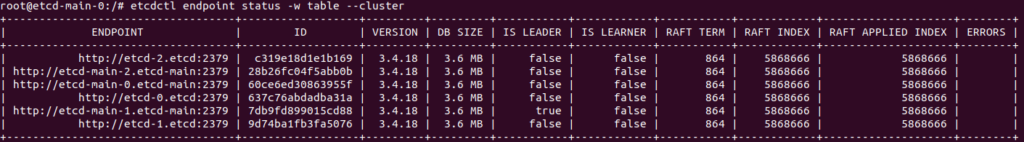

Verify that the new node has successfully joined the cluster (etcdctl endpoint status):

Add two more nodes (the steps are exactly the same as those described above):

- Add a new node to the cluster using the etcdctl member add command.

- Edit the new StatefulSet: add one replica and change the --initial-cluster key to add a new node.

- Wait for the node to successfully join the etcd cluster.

Note that the kubectl scale statefulset command cannot be used because you have to change the parameter in the start command of the new StatefulSet as well as change the replica count.
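Because the --initial-cluster value has to grow together with the replica count, it may help to generate it rather than edit it by hand. The sketch below assumes the names used in this article (etcd-main for the new StatefulSet, etcd-0 to etcd-2 for the old members); initial_cluster is a hypothetical helper.

```shell
#!/bin/sh
# Usage: initial_cluster NEW_REPLICAS
# Prints the --initial-cluster value for the new StatefulSet when it runs
# NEW_REPLICAS pods alongside the three members of the old cluster.
initial_cluster() {
  n=$1
  members=""
  i=0
  while [ "$i" -lt "$n" ]; do
    members="${members}etcd-main-$i=http://etcd-main-$i.etcd-main:2380,"
    i=$((i + 1))
  done
  echo "${members}etcd-0=http://etcd-0.etcd:2380,etcd-1=http://etcd-1.etcd:2380,etcd-2=http://etcd-2.etcd:2380"
}

# Value to use when going from one to two replicas in the new cluster:
# initial_cluster 2
```

For a single replica, the output matches the value used in the chart above.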

Check the cluster status:

Everything looks good. Now you can switch the etcd leader to one of the new nodes using etcdctl:

etcdctl move-leader 60ce6ed30863955f --endpoints=etcd-0:2379,etcd-1:2379,etcd-2:2379,etcd-main-0:2379,etcd-main-1:2379,etcd-main-2:2379

Step 5: Rerouting etcd clients

Now you need to reroute the etcd clients to the new endpoints.

In our case, the client was a PostgreSQL cluster running Patroni. A detailed description of relevant changes in its configuration would be beyond the scope of this article.

Step 6: Deleting the old nodes from the etcd cluster

It’s time to delete the old nodes. Keep in mind that these should be deleted one at a time to avoid losing the cluster quorum. Let’s look at the process step by step:

- Delete one of the old pods by scaling the StatefulSet in the old K8s cluster:

kubectl scale sts etcd --replicas=2

- Delete a member from the etcd cluster:

etcdctl member remove e93f626220dffb --endpoints=etcd-0:2379,etcd-1:2379,etcd-main-0:2379,etcd-main-1:2379,etcd-main-2:2379

- Check the status of the etcd cluster:

etcdctl endpoint health

- Repeat the process for the remaining nodes.
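The member ID passed to etcdctl member remove has to be looked up first. A small filter over etcdctl member list can do that; the sketch below assumes the default comma-separated output format, in which the first field is the member ID and the third is the member name. member_id_by_name is a hypothetical helper.

```shell
#!/bin/sh
# Usage: etcdctl member list | member_id_by_name NAME
# Reads the default (comma-separated) member list on stdin and prints the ID
# of the member whose name equals NAME. Prints nothing if there is no match.
member_id_by_name() {
  awk -F', ' -v name="$1" '$3 == name { print $1 }'
}

# Example for the pod removed by scaling the old StatefulSet down to 2:
# id=$(etcdctl member list | member_id_by_name etcd-2)
# etcdctl member remove "$id"
```

Looking the ID up by name reduces the chance of removing one of the new members by mistake.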

We recommend keeping the Persistent Volumes of old pods, if possible. They may come in handy should you need to roll back to the original state.

After removing all the old nodes, edit the etcd command in the StatefulSet in the new Kubernetes cluster (remove the old nodes from it):

…

--initial-cluster etcd-main-0=http://etcd-main-0.etcd-main:2380,etcd-main-1=http://etcd-main-1.etcd-main:2380,etcd-main-2=http://etcd-main-2.etcd-main:2380

…

Step 7: Deleting the remaining etcd resources in the old Kubernetes cluster

Once the new etcd cluster “settles down” and you are confident that it works as expected, delete the resources left over from the old etcd cluster (Persistent Volumes, Services, etc.). With that, your migration is complete — congratulations!

Conclusion

The method of migrating etcd between Kubernetes cloud clusters described above is not the most obvious one. However, it can help move etcd from one cluster to another rather quickly and without any downtime.
