A modern approach to operations solves many of the challenges that businesses face today. Containers and orchestrators make it easier to scale projects of any complexity and simplify releasing new versions while making releases more reliable. However, they also pose additional challenges for developers. The typical programmer primarily cares about their code: its architecture, quality, performance, and even elegance. They are far less concerned with deploying it to Kubernetes, or with testing and debugging it there after making even the smallest changes. That’s why we see a lot of new Kubernetes tools that help even the most old-school developers solve these problems while letting them focus on the big picture.

So here is a short review of various tools that make life easier for developers whose code runs in the pods of a Kubernetes cluster.

Basic helpers (tools)

Kubectl-debug

- Idea: Add your container to the pod to see what’s happening inside

- Resources: GitHub

- GH brief stats: 782 stars, 57 commits, 12 contributors

- Implemented in: Go

- License: Apache License 2.0

This kubectl plugin allows you to run an additional container in a pod of interest. The new container shares namespaces with the target container(s). With it, you can debug all aspects of the pod’s operation: check the network, capture network traffic, trace the process you are interested in with strace, etc.

You can also enter the target process’s container by running chroot /proc/PID/root. This is very convenient when you need a root shell in a container whose manifest sets securityContext.runAsUser.
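To make the chroot trick more concrete, here is a minimal Python sketch of what happens inside a debug container that shares the target’s PID namespace. It finds a process by name via /proc/<pid>/comm and maps a path into that process’s root filesystem through /proc/<pid>/root. The helper names and the proc_root parameter are illustrative assumptions, not part of kubectl-debug. The proc_root parameter exists only so the sketch can be exercised against a fake directory.

```python
import os
from pathlib import Path
from typing import List


def find_pids(name: str, proc_root: str = "/proc") -> List[int]:
    """Return the sorted PIDs whose /proc/<pid>/comm equals `name`."""
    pids = []
    for entry in Path(proc_root).iterdir():
        if not entry.name.isdigit():
            continue  # skip non-process entries such as /proc/meminfo or /proc/self
        try:
            comm = (entry / "comm").read_text().strip()
        except OSError:
            continue  # the process may have exited, or we lack permission
        if comm == name:
            pids.append(int(entry.name))
    return sorted(pids)


def path_in_target(pid: int, path: str, proc_root: str = "/proc") -> str:
    """Map an absolute path inside the target process to a path we can open.

    /proc/<pid>/root points at the process's root directory, which is what
    `chroot /proc/<pid>/root` switches into.
    """
    return os.path.join(proc_root, str(pid), "root", path.lstrip("/"))
```

In a debug session, something like `path_in_target(find_pids("nginx")[0], "/etc/nginx/nginx.conf")` would point at the target container’s config file without needing a shell inside it.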

The tool is simple and efficient and might be quite useful for developers.

P.S. It’s also worth mentioning that the latest Kubernetes release, v1.16, introduced the “ephemeral containers” feature (currently in alpha), which brings very similar functionality into the core. Thus, this plugin is expected to be superseded by a native command (kubectl debug) soon.
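With ephemeral containers, debugging amounts to adding a container entry to a running pod. The Python sketch below only builds such an entry as a plain dict. The field names (name, image, command, stdin, tty, targetContainerName) follow the core/v1 EphemeralContainer type. How the entry is submitted (via the pod’s ephemeralcontainers subresource) has changed between Kubernetes versions, so check the request format against your cluster’s API reference. The helper name is hypothetical.

```python
import json
from typing import List, Optional


def ephemeral_debug_container(name: str, image: str,
                              target: Optional[str] = None,
                              command: Optional[List[str]] = None) -> dict:
    """Build a core/v1 EphemeralContainer entry for an interactive debug shell."""
    container = {
        "name": name,
        "image": image,
        "stdin": True,  # keep stdin open so a client can attach
        "tty": True,    # allocate a terminal for the shell
    }
    if command:
        container["command"] = command
    if target:
        # Ask to share the process namespace of this container (where supported).
        container["targetContainerName"] = target
    return container


if __name__ == "__main__":
    spec = ephemeral_debug_container("debugger", "busybox", target="php", command=["sh"])
    print(json.dumps(spec, indent=2))
```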

Telepresence

- Idea: Transfer an application to a local machine for developing and debugging

- Resources: Homepage; Docs; GitHub

- GH brief stats: 2213 stars, 2735 commits, 36 contributors

- Implemented in: Python

- License: Apache License 2.0

Telepresence allows you to run an application container on a local user machine. In this case, all traffic from the computer to the cluster and vice versa goes through a proxy. This enables fully local development: you edit files in your favorite IDE and see the results immediately.
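The core proxying idea can be illustrated with a tiny bidirectional TCP forwarder. This asyncio sketch is not how Telepresence is implemented, since it relies on its own proxy running in the cluster. It only shows the basic mechanism of relaying bytes both ways between a local client and a remote endpoint. The local echo server stands in for a service in the cluster, and all hosts and ports are hypothetical.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then half-close the writer."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
        if writer.can_write_eof():
            writer.write_eof()  # signal EOF; the opposite direction stays open
    except OSError:
        pass  # connection reset by either side: stop relaying this direction


async def handle(client_r, client_w, target_host: str, target_port: int) -> None:
    """Relay one accepted connection to the target, in both directions at once."""
    remote_r, remote_w = await asyncio.open_connection(target_host, target_port)
    try:
        await asyncio.gather(pipe(client_r, remote_w), pipe(remote_r, client_w))
    finally:
        remote_w.close()
        client_w.close()


async def start_proxy(listen_port: int, target_host: str, target_port: int):
    """Listen on localhost and forward every connection to target_host:target_port."""
    return await asyncio.start_server(
        lambda r, w: handle(r, w, target_host, target_port), "127.0.0.1", listen_port)


async def demo_roundtrip(message: bytes) -> bytes:
    """Send a message through the proxy to a local echo server and return the reply."""
    async def echo(r, w):
        w.write(await r.read(65536))
        await w.drain()
        w.close()

    echo_server = await asyncio.start_server(echo, "127.0.0.1", 0)
    echo_port = echo_server.sockets[0].getsockname()[1]
    proxy = await start_proxy(0, "127.0.0.1", echo_port)
    proxy_port = proxy.sockets[0].getsockname()[1]

    reader, writer = await asyncio.open_connection("127.0.0.1", proxy_port)
    writer.write(message)
    await writer.drain()
    reply = await reader.read(65536)
    writer.close()
    await writer.wait_closed()
    for server in (proxy, echo_server):
        server.close()
        await server.wait_closed()
    return reply
```

Every byte crosses the network twice in this design, which is one way to see why such setups are sensitive to connection speed and stability.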

The advantages of running containers locally are many: convenience, instant results, and the ability to debug an application in the usual way. However, there are some disadvantages. Telepresence demands a stable, fast network connection, which is especially noticeable with high-RPS and heavy-traffic applications. Also, Telepresence has some issues with volume mounts under Windows, which could be a major obstacle for developers accustomed to this OS.

P.S. In general, we recommend Telepresence to some of our customers (developers), and they are quite happy with it. This tool is a CNCF Sandbox project. As a popular alternative to Telepresence, you might also consider Skaffold, described below.

Ksync

- Idea: Almost instant synchronization of code with the container in the cluster

- Resources: Docs; GitHub

- GH brief stats: 595 stars, 364 commits, 11 contributors

- Implemented in: Go

- License: Apache License 2.0

Ksync helps developers sync files between a local folder and a folder in a container running in a Kubernetes cluster. This tool is perfect for developers working with scripting languages, for whom the major hurdle is delivering code into a running container. Ksync solves this problem.

When you initialize ksync with ksync init, a DaemonSet is created in the cluster. It monitors the filesystem state of the selected container. The developer then runs ksync watch on the local machine. It watches config files and launches syncthing to handle the actual file syncing to the cluster.
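Conceptually, a watcher has to notice which files changed so that only those are shipped to the container. ksync delegates this to syncthing. The Python sketch below swaps in the simplest possible technique instead: periodically taking snapshots of file modification times and sizes and comparing them. It illustrates the idea only and is not how syncthing works internally.

```python
import os
from typing import Dict, Set, Tuple

Snapshot = Dict[str, Tuple[float, int]]  # relative path -> (mtime, size)


def snapshot(root: str) -> Snapshot:
    """Record mtime and size for every regular file under root."""
    result: Snapshot = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            full = os.path.join(dirpath, filename)
            st = os.stat(full)
            result[os.path.relpath(full, root)] = (st.st_mtime, st.st_size)
    return result


def diff(old: Snapshot, new: Snapshot) -> Tuple[Set[str], Set[str], Set[str]]:
    """Return (added, modified, deleted) relative paths between two snapshots."""
    added = set(new.keys() - old.keys())
    deleted = set(old.keys() - new.keys())
    modified = {p for p in new.keys() & old.keys() if new[p] != old[p]}
    return added, modified, deleted
```

A polling loop would call snapshot() every second or so, compute diff() against the previous snapshot, and push only the added and modified files (and delete the removed ones) in the container.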

Finally, you need to select folders for syncing to the cluster. For example, the following command:

ksync create --name=myproject --namespace=test --selector=app=backend --container=php --reload=false /home/user/myproject/ /var/www/myproject/

…will create a watcher called myproject. It will look for a pod with the app=backend label and try to sync the local /home/user/myproject/ directory to the /var/www/myproject/ directory in the php container.

Here is our experience with ksync (and some useful suggestions):

- Ksync only supports overlay2 as a storage driver for Docker. It is not compatible with any other drivers.

- When using Windows as a client OS, correct operation of the filesystem watcher isn’t guaranteed. This bug manifests itself when working with large folders (containing a large number of files and subfolders). We have opened a corresponding issue in the syncthing project; however, there has been no progress as of now (since the beginning of July).

- Use the .stignore file to specify path or file patterns that should not be synchronized to other devices (e.g., app/cache folders, .git).

- By default, ksync will reload the container in response to changes in files. This behavior is great for Node.js but completely unnecessary in the case of PHP. It is better to turn off opcache and use the --reload=false flag.

- You can always adjust the configuration in $HOME/.ksync/ksync.yaml.
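To see how ignore patterns prune the set of synchronized paths, here is a rough Python approximation using fnmatch. Syncthing’s .stignore format has its own syntax, which this sketch does not implement. That syntax includes negation with `!`, comments with `//`, and patterns anchored to the folder root with a leading `/`. The function names and patterns are illustrative.

```python
import fnmatch
from typing import Iterable, List


def is_ignored(path: str, patterns: Iterable[str]) -> bool:
    """Simplified check: a pattern may match any single path component or any
    leading part of the path (e.g. "app/cache" matches "app/cache/x.cache")."""
    parts = path.split("/")
    prefixes = ["/".join(parts[:i]) for i in range(1, len(parts) + 1)]
    candidates = prefixes + parts
    patterns = list(patterns)
    return any(fnmatch.fnmatchcase(c, p) for c in candidates for p in patterns)


def files_to_sync(paths: Iterable[str], patterns: List[str]) -> List[str]:
    """Keep only the paths that no ignore pattern matches."""
    return [p for p in paths if not is_ignored(p, patterns)]
```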

Squash

- Idea: Debugging processes while they run in the cluster

- Resources: Homepage; Docs; GitHub

- GH brief stats: 1193 stars, 280 commits, 23 contributors

- Implemented in: Go

- License: Apache License 2.0

This tool is intended for debugging processes directly inside the pods. It is easy to use and allows you to interactively select the desired debugger (see below) and namespace/pod of the process of interest. Currently, Squash has support for:

- delve — for Go-based applications;

- GDB — via target remote + port forwarding;

- JDWP port forwarding for debugging Java applications.

As for IDEs, VS Code is supported (via this extension), and plans for the current year (2019) include support for Eclipse and IntelliJ. (The README on GitHub says “We will be updating our Intellij extension in early 2019,” so it is unclear when, or whether, this will actually happen.)

Squash runs a privileged container on the same node as the target process, so you should first learn about its Secure Mode to avoid security problems.

Complex solutions

Now it’s time to turn our attention to the “heavy artillery”: bigger projects designed to meet more complex developer needs.

werf

- Idea: Build your Docker images efficiently and glue your CI with Kubernetes

- Resources: Homepage; Docs; GitHub

- GH brief stats: 1233 stars, 5564 commits, 17 contributors

- Implemented in: Go

- License: Apache License 2.0

This tool is briefly described as “a missing part of a CI/CD system”. It aims to improve the continuous delivery process by adding building and deploying features to your existing CI system. It follows a GitOps approach in which a Git repository is the single source of truth. The repository should contain the full configuration of your application, including the instructions for building its images and the infrastructure it needs to run (as Helm charts for Kubernetes).

With werf, you can build as many Docker images as a single project needs, and you can build one image on top of another image defined in the same config. Images can be built from a Dockerfile or with werf’s custom builder instructions. The custom builder offers many advanced features, such as incremental rebuilds (based on Git history), building with Ansible tasks or Shell scripts, sharing a common cache between builds, and reducing image size.
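The incremental-rebuild idea boils down to content-addressed, chained stages. Each stage’s cache key depends on its own instructions and on the key of the stage below it. Changing one stage therefore invalidates only that stage and the stages built on top of it. werf’s actual stage signatures take more inputs into account (including Git history). This Python sketch, with made-up stage names and instructions, shows only the chaining principle.

```python
import hashlib
from typing import List, Tuple


def stage_digests(stages: List[Tuple[str, str]]) -> List[str]:
    """Compute a chained SHA-256 digest for each (name, instructions) stage, bottom-up."""
    digests = []
    parent = ""
    for name, instructions in stages:
        h = hashlib.sha256()
        h.update(parent.encode())  # depend on every stage below this one
        h.update(name.encode() + b"\0" + instructions.encode())
        parent = h.hexdigest()
        digests.append(parent)
    return digests


def stages_to_rebuild(old: List[str], new: List[str]) -> List[int]:
    """Indexes of stages whose digest changed and therefore cannot come from cache."""
    return [i for i, d in enumerate(new) if i >= len(old) or old[i] != d]
```

For example, editing only the last stage’s instructions yields `[2]` for a three-stage image. Editing the middle stage yields `[1, 2]`, because the last stage sits on top of it.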

Your images can be published to one or several Docker registries using different image tagging strategies. Registries are then cleaned up according to customizable policies, while images still in use in Kubernetes clusters are kept.
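A cleanup policy of this kind can be sketched as a simple per-tag decision:

- keep anything still referenced by running workloads;
- keep the N most recent tags;
- delete the rest.

The Image record, the keep_last parameter, and the policy itself are hypothetical simplifications for illustration. werf’s actual policies are configurable and considerably richer.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Image:
    tag: str
    created: datetime


def tags_to_delete(images: Iterable[Image], in_use: Set[str], keep_last: int) -> List[str]:
    """Return tags that are neither used in a cluster nor among the newest keep_last."""
    newest_first = sorted(images, key=lambda img: img.created, reverse=True)
    recent = {img.tag for img in newest_first[:keep_last]}
    return [img.tag for img in newest_first
            if img.tag not in in_use and img.tag not in recent]
```

In a real setup, `in_use` would be collected by listing the images of pods in every cluster the registry serves. That step is the part that prevents deleting an image a running workload still needs.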

The major features of deploying these images to Kubernetes include:

- Checking whether an application is deployed correctly: tracking the status of all application resources, controlling resource readiness and the deployment process via annotations, logging, and error reporting.

- Failing the CI pipeline fast (i.e., without waiting for a full timeout) when a problem is detected.

- Full compatibility with Helm 2.

- Ability to limit deploy user access using RBAC definition.

- Parallel deploys on a single host (using file locks).

- Continuous delivery of new images tagged with the same name (for example, by git branch).
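The “fail fast” behavior works like this: instead of waiting for a global timeout, the tracker polls resource status and aborts as soon as any resource reports an unrecoverable error. kubedog does this against the Kubernetes API. In this Python sketch, the status source is a hypothetical callback returning "ready", "progressing", or "failed", so the control flow can be shown without a cluster.

```python
import time
from typing import Callable, Iterable


class DeployFailed(Exception):
    """Raised as soon as any tracked resource reports a failure."""


def track(resources: Iterable[str],
          status_of: Callable[[str], str],
          timeout: float = 300.0,
          interval: float = 1.0) -> None:
    """Wait until all resources are ready; raise at the first failure or on timeout."""
    pending = set(resources)
    deadline = time.monotonic() + timeout
    while pending:
        for name in sorted(pending):  # iterate over a copy, since we discard below
            status = status_of(name)
            if status == "failed":
                raise DeployFailed(f"{name} failed")  # fail fast, no need to wait
            if status == "ready":
                pending.discard(name)
        if not pending:
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still not ready: {sorted(pending)}")
        time.sleep(interval)
```

In CI, the exception would make the deploy step exit with a non-zero code, so the pipeline stops right away with an error message naming the failed resource.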

P.S. A related project, kubedog, is used internally by werf to watch Kubernetes resources. You can also use it in your CI/CD pipelines without werf.

DevSpace

- Idea: If you want to start using Kubernetes without a deep dive into its internals

- Resources: Homepage; Docs; GitHub

- GH brief stats: 677 stars, 2349 commits, 16 contributors

- Implemented in: Go

- License: Apache License 2.0

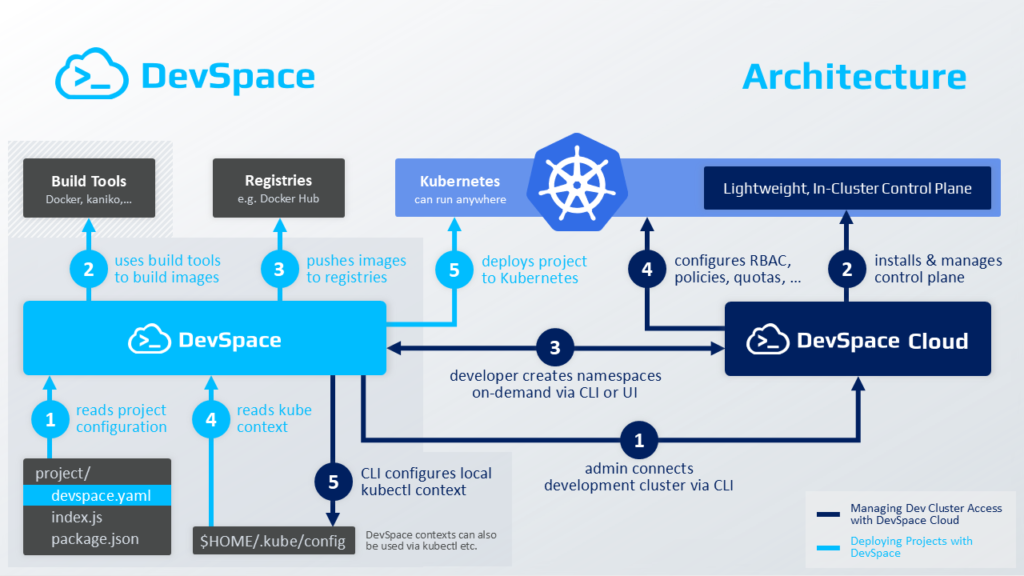

This solution is developed by the company of the same name, which also provides managed Kubernetes clusters for team-based development. DevSpace was originally designed for the company’s commercial clusters, but now it works with any other cluster as well.

When you run the devspace init command in the project directory, you will be interactively prompted to:

- select a running Kubernetes cluster;

- use the existing Dockerfile (or generate a new one) to create a container;

- select a repository for storing container images, etc.

After all these preparatory steps, you can start developing by running the devspace dev command. It will build the container, push it to the repository, roll out the deployment to the cluster, set up port forwarding, and synchronize your local workspace with the container.

You will also be offered a shell in your container in the current terminal. Feel free to use it: the container starts with a sleep command, so to actually test the application you will need to start it manually.

Finally, devspace deploy will deploy an application and related infrastructure to the cluster, and then everything will start running “for real”.

Project configuration is stored in the devspace.yaml file. Besides the settings for the development environment, it can contain an infrastructure description similar to Kubernetes manifests (though highly simplified).

Furthermore, you can easily add a predefined component (e.g., MySQL DBMS) or a Helm chart to the project. This feature is pretty straightforward; see the documentation for details.

P.S. DevSpace v4.0, a major update, was released this Monday.

Skaffold

- Idea: Fast and highly optimized development tool that does not require a cluster-side component

- Resources: Homepage; Docs; GitHub

- GH brief stats: 7629 stars, 4657 commits, 144 contributors

- Implemented in: Go

- License: Apache License 2.0

This tool, developed by Google, claims to cover all the requirements of a developer whose code will run in a Kubernetes cluster. It has a steeper learning curve than DevSpace: Skaffold isn’t interactive at all, and it will neither detect your programming language nor generate a Dockerfile for you.

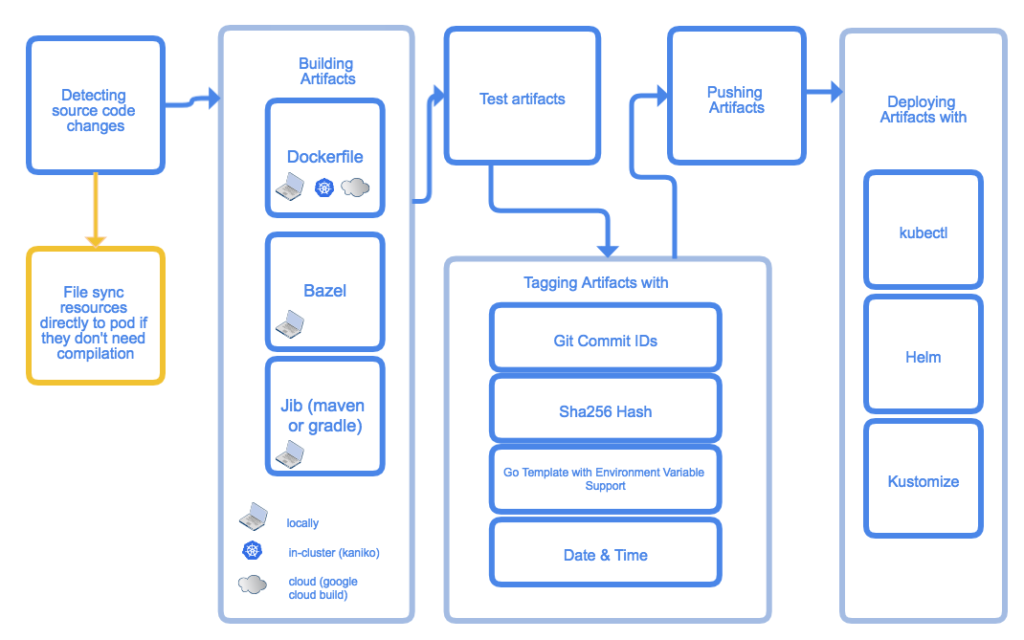

However, if that doesn’t scare you, here is an impressive shortlist of what Skaffold can do. It can:

- Detect changes in your source code.

- Synchronize them with a container in the pod (if it does not require building).

- Build containers that contain the source code (for interpreted programming languages), or compile artifacts and pack them into containers.

- Automatically test the resulting images using container-structure-test.

- Tag images and push them to a Docker registry.

- Deploy an application to the cluster using kubectl, Helm or kustomize.

- Forward ports.

- Debug applications that are written in Java, Node.js, and Python.

Various Skaffold workflows are configured declaratively in the skaffold.yaml file. You can define several profiles for every project, where each profile sets specific parameters for the build and deploy stages. For example, you can configure Skaffold to use any base image you like during development and switch to a minimal image for staging and production. A profile can also set a securityContext for containers or change the cluster the application is deployed to.
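A profile can be thought of as an overlay on a base configuration. Skaffold’s real semantics are its own: profiles replace whole sections of skaffold.yaml and can also apply JSON patches. The Python sketch below uses a plain recursive dict merge instead, only to illustrate how a “production” profile might swap the base image and target cluster while leaving the rest untouched. The keys baseImage, tagPolicy, and context are hypothetical and are not actual skaffold.yaml fields.

```python
import copy
from typing import Any, Dict


def apply_profile(base: Dict[str, Any], overlay: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of base with overlay merged in recursively (overlay wins)."""
    result = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = apply_profile(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical configuration keys, for illustration only.
base = {
    "build": {"baseImage": "python:3.8", "tagPolicy": "gitCommit"},
    "deploy": {"context": "dev-cluster"},
}
production = {
    "build": {"baseImage": "python:3.8-slim"},
    "deploy": {"context": "prod-cluster"},
}
```

`apply_profile(base, production)` keeps `tagPolicy` from the base config but takes the slim image and the production cluster from the profile. The base dict itself is not modified.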

Docker images can be built locally, remotely via Google Cloud Build, or in the cluster via Kaniko. Bazel and Jib Maven/Gradle are also supported. Skaffold supports many tagging strategies: Git commit hashes, date/time values, SHA256 hashes of contents, etc.
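These tagging strategies differ only in what the tag is derived from. This Python sketch derives a tag from a short Git commit hash, from a timestamp, and from a SHA-256 hash over the files of a build context. The function names and tag formats are illustrative assumptions; Skaffold’s own taggers have their own formats and options. The timestamp format avoids `:` because Docker tags may not contain it.

```python
import hashlib
import os
from datetime import datetime


def git_commit_tag(commit_sha: str, length: int = 7) -> str:
    """Short commit hash, as commonly used for image tags."""
    return commit_sha[:length]


def datetime_tag(now: datetime, fmt: str = "%Y-%m-%d_%H-%M-%S") -> str:
    """Timestamp-based tag using only characters allowed in Docker tags."""
    return now.strftime(fmt)


def content_tag(root: str) -> str:
    """SHA-256 over relative paths and contents of all files, in a stable order."""
    h = hashlib.sha256()
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # sort in place so os.walk visits directories deterministically
        for filename in sorted(filenames):
            full = os.path.join(dirpath, filename)
            with open(full, "rb") as f:
                data = f.read()
            rel = os.path.relpath(full, root).replace(os.sep, "/")
            # Prefix each file with its path and length so records cannot run together.
            h.update(f"{rel}\0{len(data)}\0".encode())
            h.update(data)
    return h.hexdigest()
```

A content-based tag changes only when the inputs change, so rebuilding an unchanged context produces the same tag. A timestamp tag is unique on every build.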

Particularly noteworthy is the ability of Skaffold to test containers. The aforementioned container-structure-test framework provides several methods of validation:

- Command testing (running commands in the container and checking their exit statuses and expected output in stdout and stderr).

- File existence testing (testing for the existence of files in the container and matching their attributes to specified ones).

- File content testing (via regular expressions).

- Image metadata testing (ensures the correctness of various fields: ENV, ENTRYPOINT, VOLUMES, etc.).

- License compatibility testing.
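To make the command and file content checks concrete, here is a Python sketch of both:

- a command test that checks the exit status and matches regular expressions against stdout;
- a file content test.

This is not container-structure-test itself, which is configured in YAML and runs its checks inside the image. Here the command runs on the local machine, and the function names are hypothetical.

```python
import re
import subprocess
from typing import List, Sequence


def command_test(argv: Sequence[str], expected_exit: int = 0,
                 expected_output: Sequence[str] = (),
                 excluded_output: Sequence[str] = ()) -> List[str]:
    """Run a command and return failure messages (an empty list means the test passed)."""
    proc = subprocess.run(list(argv), capture_output=True, text=True)
    failures = []
    if proc.returncode != expected_exit:
        failures.append(f"exit code {proc.returncode}, expected {expected_exit}")
    for pattern in expected_output:
        if not re.search(pattern, proc.stdout):
            failures.append(f"stdout does not match {pattern!r}")
    for pattern in excluded_output:
        if re.search(pattern, proc.stdout):
            failures.append(f"stdout unexpectedly matches {pattern!r}")
    return failures


def file_content_test(path: str, expected: Sequence[str]) -> List[str]:
    """Check that a file's contents match every regular expression in expected."""
    with open(path, encoding="utf-8") as f:
        content = f.read()
    return [f"{path} does not match {p!r}" for p in expected
            if not re.search(p, content)]
```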

However, syncing files with the container is implemented in a sub-optimal way. Skaffold simply creates an archive containing the source files, then copies and unpacks it in the container (where the tar utility has to be installed). So if your primary objective is code syncing, you are better off choosing a more specialized solution (like ksync).
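The archive-based approach is easy to picture: pack the changed files into a tar stream, ship it, and unpack it at the destination. This Python sketch uses the standard tarfile module and unpacks into a local directory that stands in for the container. In a real setup, the stream would be piped into something like `tar x` running inside the container; that transport is an assumption here, not a description of Skaffold’s code.

```python
import io
import os
import tarfile
from typing import Iterable


def pack(root: str, relpaths: Iterable[str]) -> bytes:
    """Create an in-memory tar archive containing the given files under root."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for rel in relpaths:
            tar.add(os.path.join(root, rel), arcname=rel)
    return buf.getvalue()


def unpack(archive: bytes, dest: str) -> None:
    """Extract an archive produced by pack() into dest (the 'container' side)."""
    with tarfile.open(fileobj=io.BytesIO(archive), mode="r") as tar:
        for member in tar.getmembers():
            # Refuse absolute paths and '..' so the archive cannot escape dest.
            if os.path.isabs(member.name) or ".." in member.name.split("/"):
                raise ValueError(f"unsafe path in archive: {member.name}")
        if hasattr(tarfile, "data_filter"):  # extraction filters exist in newer Pythons
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
```

Even a one-line change re-sends each whole changed file wrapped in an archive. Tools built for syncing can instead transfer only the parts of a file that changed.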

Key stages of Skaffold operation

Overall, Skaffold’s lack of interactivity and of any abstraction over Kubernetes manifests makes it fairly difficult to master, though this is partly offset by greater freedom of action.

UPDATE (20/11/2019): We have published a more detailed article on Skaffold here.

Garden

- Idea: Automate repetitive parts of your workflow

- Resources: Homepage; Docs; GitHub

- GH brief stats: 1165 stars, 2064 commits, 18 contributors

- Implemented in: TypeScript (in the future, the project will be split into several components, some of them written in Go; there will also be an SDK for creating add-ons in TypeScript/JavaScript and Go)

- License: Apache License 2.0

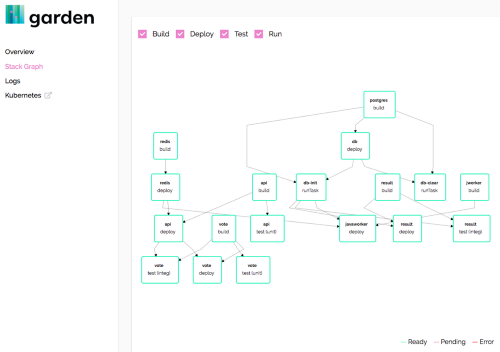

Like some of the other tools mentioned, Garden aims to automate delivering application code to the K8s cluster. To do this, you first describe the project structure in a YAML file and then run the garden dev command. It then does all the magic:

- Build containers with various parts of the project;

- Run integration and unit tests defined in the project;

- Deploy all components of the project to the cluster;

- Restart the pipeline in response to source code changes.

This tool is best suited for team-based development in a shared remote cluster. In this setting, a build cache lets Garden reuse the results of stages that have already been completed, which significantly speeds up the whole process.

A project module can be a container, a Maven container, a Helm chart, a manifest for kubectl apply, or even an OpenFaaS function. You can pull any module from a remote Git repository. A module may or may not define services, tasks, and tests. Services and tasks may have dependencies, so you can define the order in which services are deployed and in which tasks and tests are run.
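Dependency ordering plus caching is what lets such a pipeline skip work. This Python sketch uses graphlib (Python 3.9+) to order modules topologically. It gives each module a version hash that covers its own sources and its dependencies’ versions, and it skips any module whose version is already in the cache. The module names, the dependency map, and the cache are made up, and Garden’s real versioning is more involved.

```python
import hashlib
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple


def module_version(name: str, sources: str, dep_versions: List[str]) -> str:
    """A module's version covers its own sources and its dependencies' versions."""
    h = hashlib.sha256(name.encode() + b"\0" + sources.encode())
    for v in dep_versions:
        h.update(v.encode())
    return h.hexdigest()[:12]


def plan(deps: Dict[str, Set[str]], sources: Dict[str, str],
         cache: Set[str]) -> Tuple[List[str], Dict[str, str]]:
    """Return (modules to build in dependency order, version of every module)."""
    versions: Dict[str, str] = {}
    to_build: List[str] = []
    # static_order() yields every dependency before the modules that need it.
    for name in TopologicalSorter(deps).static_order():
        dep_versions = [versions[d] for d in sorted(deps.get(name, set()))]
        versions[name] = module_version(name, sources[name], dep_versions)
        if versions[name] not in cache:
            to_build.append(name)  # nothing cached for this exact version
    return to_build, versions
```

With web depending on api and api depending on db, a first run builds all three modules. After the results are cached, changing only api’s sources rebuilds api and web but reuses db.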

Garden provides a beautiful dashboard (an experimental feature) that gives you a full overview of your project: its components, build sequence, the order of tasks and tests, and their relationships and dependencies. You can also view the logs of all components right in the browser, check the HTTP output of a selected component (an Ingress resource must be defined for it), and more.

This tool also has a hot-reload mode that syncs code changes to the container in the cluster, significantly speeding up application debugging. Garden has good documentation and an extensive set of examples that help users get comfortable with the tool and start using it.

P.S. We also recommend this rather amusing article written by one of Garden’s developers.

Conclusion

Of course, the list of tools for developing and debugging applications in Kubernetes isn’t limited to those described in this article. There are many more useful and practical tools worthy of a separate article or, at the very least, a mention. Please share the tools you use, the problems you have encountered, and possible solutions!
